Chapter 4 Descriptive statistics and data manipulation

Now that we are familiar with some R objects and know how to import data, it is time to write some

code. In this chapter, we are going to compute descriptive statistics for a single dataset, but

also for a list of datasets later in the chapter. However, I will not give a list of functions to

compute descriptive statistics; if you need a specific function, you can easily find it in the Help

pane in RStudio or with any modern internet search engine. What I will do is show you a workflow

that allows you to quickly compute the descriptive statistics you need. R has a lot of built-in

functions for descriptive statistics; however, if you want to compute statistics for different

sub-groups, some more complex manipulations are needed. At least this was true in the past.

Nowadays, thanks to the packages from the {tidyverse}, it is very easy and fast to compute

descriptive statistics by any stratifying variable(s). The package we are going to use for this is

called {dplyr}. {dplyr} contains a lot of functions that make manipulating data and computing

descriptive statistics very easy. To make things easier for now, we are going to use example data

included with {dplyr}, so there is no need to import an external dataset. This does not change anything about

the example that we are going to study here, since the source of the data does not matter. Using

{dplyr} is possible only if the data you are working with is already in a useful shape. When data

is messier, you will first need to manipulate it to bring it into a tidy format. For this, we will

use {tidyr}, which is a very useful package to reshape data and to do advanced cleaning of your

data. All these tidyverse functions are also called verbs. However, before getting to know these

verbs, let’s do an analysis using standard, or base R functions. This will be the benchmark

against which we are going to measure a {tidyverse} workflow.

4.1 A data exploration exercise using base R

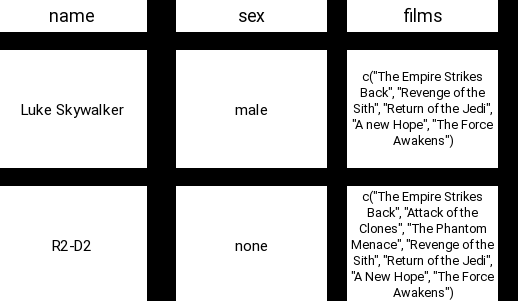

Let’s first load the starwars data set, included in the {dplyr} package:

library(dplyr)

data(starwars)

Let’s first take a look at the data:

head(starwars)

## # A tibble: 6 × 14

## name height mass hair_…¹ skin_…² eye_c…³ birth…⁴ sex gender homew…⁵

## <chr> <int> <dbl> <chr> <chr> <chr> <dbl> <chr> <chr> <chr>

## 1 Luke Skywal… 172 77 blond fair blue 19 male mascu… Tatooi…

## 2 C-3PO 167 75 <NA> gold yellow 112 none mascu… Tatooi…

## 3 R2-D2 96 32 <NA> white,… red 33 none mascu… Naboo

## 4 Darth Vader 202 136 none white yellow 41.9 male mascu… Tatooi…

## 5 Leia Organa 150 49 brown light brown 19 fema… femin… Aldera…

## 6 Owen Lars 178 120 brown,… light blue 52 male mascu… Tatooi…

## # … with 4 more variables: species <chr>, films <list>, vehicles <list>,

## # starships <list>, and abbreviated variable names ¹hair_color, ²skin_color,

## # ³eye_color, ⁴birth_year, ⁵homeworld

This data contains information on Star Wars characters. The first question we want to answer is: what is the average height of the characters?

mean(starwars$height)

## [1] NA

As discussed in Chapter 2, $ allows you to access the columns of a data.frame object.

Because there are NA values in the data, the result is also NA. To get the result, you need to

add an option to mean():

mean(starwars$height, na.rm = TRUE)

## [1] 174.358

Let’s also take a look at the standard deviation:

sd(starwars$height, na.rm = TRUE)

## [1] 34.77043

It might be more informative to compute these two statistics by sex; for this, we are going

to use aggregate():

aggregate(starwars$height,

by = list(sex = starwars$sex),

mean)

## sex x

## 1 female NA

## 2 hermaphroditic 175

## 3 male NA

## 4 none NA

Oh, shoot! Most groups have missing values in them, so we get NA back. We need to use na.rm = TRUE

just like before. Thankfully, it is possible to pass this option to mean() inside aggregate() as well:

aggregate(starwars$height,

by = list(sex = starwars$sex),

mean, na.rm = TRUE)

## sex x

## 1 female 169.2667

## 2 hermaphroditic 175.0000

## 3 male 179.1053

## 4 none 131.2000

Later in the book, we are also going to see how to define our own functions (with the default options that

are useful to us); this will also help in this sort of situation.
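As a preview, here is a minimal sketch of what such a function could look like. Both the toy data frame and the helper name mean_na() are hypothetical, made up for illustration; the idea is simply that a function with na.rm = TRUE built in can be handed to aggregate() directly:

```r
# Hypothetical toy data: one missing height in group "f"
df <- data.frame(sex    = c("f", "f", "m", "m"),
                 height = c(160, NA, 180, 190))

# Hypothetical helper: mean() with missing values removed by default
mean_na <- function(x) mean(x, na.rm = TRUE)

# No need to pass na.rm = TRUE through aggregate() anymore
res <- aggregate(df$height, by = list(sex = df$sex), FUN = mean_na)
res
```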

Even though we can use na.rm = TRUE, let’s also use subset() to filter out the NA values beforehand:

starwars_no_nas <- subset(starwars,

!is.na(height))

aggregate(starwars_no_nas$height,

by = list(sex = starwars_no_nas$sex),

mean)

## sex x

## 1 female 169.2667

## 2 hermaphroditic 175.0000

## 3 male 179.1053

## 4 none 131.2000

(aggregate() also has a subset = option, but I prefer to explicitly subset the data set with subset().)

Even if you are not familiar with aggregate(), I believe the above lines are quite

self-explanatory. You need to provide aggregate() with three things: the variable you want to

summarize (or the whole data frame, if you want to summarize all variables), a list of grouping

variables, and the function that will be applied to each subgroup. And by the way, to test for

NA, one uses the function is.na(), not something like species == "NA".

!is.na() does the opposite (! reverses booleans, so !TRUE becomes FALSE and vice-versa).
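A quick way to convince yourself is to try both approaches on a small, made-up character vector:

```r
species <- c("Human", NA, "Droid")

# Comparing with the string "NA" does not detect a missing value:
# it returns FALSE for real strings and NA for the missing entry
species == "NA"

# is.na() is the correct test; ! negates its result
is.na(species)
!is.na(species)

# Keep only the non-missing values
species[!is.na(species)]
```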

You can easily add another grouping variable:

aggregate(starwars_no_nas$height,

by = list(Sex = starwars_no_nas$sex,

`Hair color` = starwars_no_nas$hair_color),

mean)

## Sex Hair color x

## 1 female auburn 150.0000

## 2 male auburn, grey 180.0000

## 3 male auburn, white 182.0000

## 4 female black 166.3333

## 5 male black 176.2500

## 6 male blond 176.6667

## 7 female blonde 168.0000

## 8 female brown 160.4000

## 9 male brown 182.6667

## 10 male brown, grey 178.0000

## 11 male grey 170.0000

## 12 female none 188.2500

## 13 male none 182.2414

## 14 none none 148.0000

## 15 female white 167.0000

## 16 male white 152.3333

Or use another function:

aggregate(starwars_no_nas$height,

by = list(Sex = starwars_no_nas$sex),

sd)

## Sex x

## 1 female 15.32256

## 2 hermaphroditic NA

## 3 male 36.01075

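## 4 none 49.14977

The NA for hermaphroditic is expected: there is only one such individual, and the standard deviation of a single value is undefined. It is important to note that aggregate() returns a data.frame object.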

You can only give one function to aggregate(), so if you need the mean and the standard deviation of height,

you must do it in two steps.
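Here is a minimal sketch of those two steps on a small, made-up data frame: compute each statistic with its own aggregate() call, then join the two results with merge(). It uses the formula interface of aggregate() (height ~ sex), an alternative to the by = argument used so far; note that this interface drops rows with missing values by default:

```r
df <- data.frame(sex    = c("f", "f", "m", "m", "m"),
                 height = c(160, 170, 175, 180, 185))

# Step 1: one aggregate() call per statistic
means <- aggregate(height ~ sex, data = df, FUN = mean)
sds   <- aggregate(height ~ sex, data = df, FUN = sd)

# Step 2: join both tables on the grouping variable;
# suffixes disambiguate the two "height" columns
res <- merge(means, sds, by = "sex", suffixes = c("_mean", "_sd"))
res
```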

Since R 4.1, a new infix operator |> has been introduced, which is really handy for writing the kind of

code we’ve been looking at in this chapter. |> is also called a pipe, or the base pipe to distinguish

it from another pipe that we’ll discuss in the next section. For now, let’s learn about |>.

Consider the following:

10 |> sqrt()

## [1] 3.162278

This computes sqrt(10): |> passes the left-hand side (10, in the example above) as the first argument of the

right-hand side (sqrt()). Using |> might seem more complicated and verbose than not using it, but you

will see in a bit why it can be useful. The next function I would like to introduce at this point is with().

with() makes it possible to apply functions on data.frame columns without having to write $ all the time.

For example, consider this:

mean(starwars$height, na.rm = TRUE)

## [1] 174.358

with(starwars,

mean(height, na.rm = TRUE))

## [1] 174.358

The advantage of using with() is that we can directly reference height without using $. Here again, this

is more verbose than simply using $… so why bother with it? It turns out that by combining |> and with(),

we can write very clean and concise code. Let’s go back to a previous example to illustrate this idea:

starwars_no_nas <- subset(starwars,

!is.na(height))

aggregate(starwars_no_nas$height,

by = list(sex = starwars_no_nas$sex),

mean)

## sex x

## 1 female 169.2667

## 2 hermaphroditic 175.0000

## 3 male 179.1053

## 4 none 131.2000

First, we created a new dataset where we filtered out rows where height is NA. This dataset is useless otherwise,

but we need it for the next part, where we actually do what we want (computing the average height by sex).

Using |> and with(), we can write this kind of code in one go. Let’s go a bit further this time: we drop the rows where sex is missing and compute the average height by species and sex:

starwars |>

subset(!is.na(sex)) |>

with(aggregate(height,

by = list(Species = species,

Sex = sex),

mean))

## Species Sex x

## 1 Clawdite female 168.0000

## 2 Human female NA

## 3 Kaminoan female 213.0000

## 4 Mirialan female 168.0000

## 5 Tholothian female 184.0000

## 6 Togruta female 178.0000

## 7 Twi'lek female 178.0000

## 8 Hutt hermaphroditic 175.0000

## 9 Aleena male 79.0000

## 10 Besalisk male 198.0000

## 11 Cerean male 198.0000

## 12 Chagrian male 196.0000

## 13 Dug male 112.0000

## 14 Ewok male 88.0000

## 15 Geonosian male 183.0000

## 16 Gungan male 208.6667

## 17 Human male NA

## 18 Iktotchi male 188.0000

## 19 Kaleesh male 216.0000

## 20 Kaminoan male 229.0000

## 21 Kel Dor male 188.0000

## 22 Mon Calamari male 180.0000

## 23 Muun male 191.0000

## 24 Nautolan male 196.0000

## 25 Neimodian male 191.0000

## 26 Pau'an male 206.0000

## 27 Quermian male 264.0000

## 28 Rodian male 173.0000

## 29 Skakoan male 193.0000

## 30 Sullustan male 160.0000

## 31 Toong male 163.0000

## 32 Toydarian male 137.0000

## 33 Trandoshan male 190.0000

## 34 Twi'lek male 180.0000

## 35 Vulptereen male 94.0000

## 36 Wookiee male 231.0000

## 37 Xexto male 122.0000

## 38 Yoda's species male 66.0000

## 39 Zabrak male 173.0000

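## 40 Droid none NA

Some groups (humans and droids) get NA because they contain individuals with a missing height, and this time we did not remove them. So let’s unpack this. In the first two lines, using |>, we pass the starwars data.frame to subset():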

starwars |>

subset(!is.na(sex))

## # A tibble: 83 × 14

## name height mass hair_…¹ skin_…² eye_c…³ birth…⁴ sex gender homew…⁵

## <chr> <int> <dbl> <chr> <chr> <chr> <dbl> <chr> <chr> <chr>

## 1 Luke Skywa… 172 77 blond fair blue 19 male mascu… Tatooi…

## 2 C-3PO 167 75 <NA> gold yellow 112 none mascu… Tatooi…

## 3 R2-D2 96 32 <NA> white,… red 33 none mascu… Naboo

## 4 Darth Vader 202 136 none white yellow 41.9 male mascu… Tatooi…

## 5 Leia Organa 150 49 brown light brown 19 fema… femin… Aldera…

## 6 Owen Lars 178 120 brown,… light blue 52 male mascu… Tatooi…

## 7 Beru White… 165 75 brown light blue 47 fema… femin… Tatooi…

## 8 R5-D4 97 32 <NA> white,… red NA none mascu… Tatooi…

## 9 Biggs Dark… 183 84 black light brown 24 male mascu… Tatooi…

## 10 Obi-Wan Ke… 182 77 auburn… fair blue-g… 57 male mascu… Stewjon

## # … with 73 more rows, 4 more variables: species <chr>, films <list>,

## # vehicles <list>, starships <list>, and abbreviated variable names

## # ¹hair_color, ²skin_color, ³eye_color, ⁴birth_year, ⁵homeworld

As I explained before, this is exactly the same as subset(starwars, !is.na(sex)). Then, we pass the result of

subset() to the next function, with(). The first argument of with() must be a data.frame, and this is exactly

what subset() returns! So now the output of subset() is passed down to with(), which makes it now possible

to reference the columns of the data.frame in aggregate() directly. If you have a hard time understanding what

is going on, you can use quote() to find out. quote() returns an expression without evaluating it:

quote(log(10))

## log(10)

Why am I bringing this up? Well, a |> f() is exactly equal to f(a): R rewrites the pipe when it parses the code, so quoting code that uses |> returns

the equivalent expression without |>. For instance:

quote(10 |> log())

## log(10)

So let’s quote the big block of code from above:

quote(

starwars |>

subset(!is.na(sex)) |>

with(aggregate(height,

by = list(Species = species,

Sex = sex),

mean))

)

## with(subset(starwars, !is.na(sex)), aggregate(height, by = list(Species = species,

## Sex = sex), mean))

I think you now see why using |> makes code much clearer; the nested expression you would need to write otherwise

is much less readable, unless you define intermediate objects. And without with(), this is what you

would need to write:

b <- subset(starwars, !is.na(sex))

aggregate(b$height, by = list(Species = b$species, Sex = b$sex), mean)

To finish this section, let’s say that you wanted to have the average height and mass by sex. In this case

you need to specify the columns in aggregate() with cbind() (let’s use na.rm = TRUE again instead of

subset()ing the data beforehand):

starwars |>

with(aggregate(cbind(height, mass),

by = list(Sex = sex),

FUN = mean, na.rm = TRUE))

## Sex height mass

## 1 female 169.2667 54.68889

## 2 hermaphroditic 175.0000 1358.00000

## 3 male 179.1053 81.00455

## 4 none 131.2000 69.75000

Let’s now continue with some more advanced operations using this fake dataset:

survey_data_base <- as.data.frame(

tibble::tribble(

~id, ~var1, ~var2, ~var3,

1, 1, 0.2, 0.3,

2, 1.4, 1.9, 4.1,

3, 0.1, 2.8, 8.9,

4, 1.7, 1.9, 7.6

)

)

survey_data_base

## id var1 var2 var3

## 1 1 1.0 0.2 0.3

## 2 2 1.4 1.9 4.1

## 3 3 0.1 2.8 8.9

## 4 4 1.7 1.9 7.6

Depending on what you want to do with this data, it may not be in the right shape. For example, it

would not be possible to simply compute the average of var1, var2 and var3 for each id,

because this would require running mean() by row, which is not very easy: R is not

well suited to row-based workflows. Well, I’m lying a little bit here; it turns out

that R comes with a rowMeans() function. So this would work:

survey_data_base |>

transform(mean_id = rowMeans(cbind(var1, var2, var3))) # transform() adds a column to a data.frame

## id var1 var2 var3 mean_id

## 1 1 1.0 0.2 0.3 0.500000

## 2 2 1.4 1.9 4.1 2.466667

## 3 3 0.1 2.8 8.9 3.933333

## 4 4 1.7 1.9 7.6 3.733333

But base R has no rowSD() or rowMax() functions, and so on, so it is much better to reshape the data and put it in a

format that gives us maximum flexibility. To reshape the data, we’ll be using the aptly-called reshape() command:

survey_data_long <- reshape(survey_data_base,

varying = list(2:4), v.names = "variable", direction = "long")

We can now easily compute the average of variable for each id:

aggregate(survey_data_long$variable,

by = list(Id = survey_data_long$id),

mean)

## Id x

## 1 1 0.500000

## 2 2 2.466667

## 3 3 3.933333

## 4 4 3.733333

or any other function:

aggregate(survey_data_long$variable,

by = list(Id = survey_data_long$id),

max)

## Id x

## 1 1 1.0

## 2 2 4.1

## 3 3 8.9

## 4 4 7.6

As you can see, R comes with very powerful functions right out of the box, ready to use. When I was

studying, unfortunately, my professors had been brought up on FORTRAN loops, so we had to do all

this using loops (not reshaping, thankfully), which was not so easy.
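The long data frame created by reshape() is never printed above, so here is a minimal sketch that rebuilds the same toy data with data.frame() (instead of tibble::tribble(), so that only base R is needed) and looks at the result. With the defaults, reshape() reuses the existing id column and adds a time column holding 1, 2 and 3 for var1, var2 and var3. Once the data is in this long shape, a statistic with no row-wise counterpart in base R, such as sd(), can be computed by id just like mean() or max():

```r
survey_data_base <- data.frame(id   = 1:4,
                               var1 = c(1, 1.4, 0.1, 1.7),
                               var2 = c(0.2, 1.9, 2.8, 1.9),
                               var3 = c(0.3, 4.1, 8.9, 7.6))

# Wide to long: var1, var2 and var3 are stacked into a single column, "variable"
survey_data_long <- reshape(survey_data_base,
                            varying = list(2:4), v.names = "variable",
                            direction = "long")

# 4 ids x 3 variables = 12 rows; sorting by id groups each respondent's values
survey_data_long[order(survey_data_long$id), ]

# Standard deviation by id, using the formula interface of aggregate()
aggregate(variable ~ id, data = survey_data_long, FUN = sd)
```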

Now that we have seen how base R works, let’s redo the analysis using {tidyverse} verbs.

The {tidyverse} provides many more functions, each of them doing only one single thing. You will

shortly see why this is quite important; by focusing on just one task, and by focusing on the data frame

as the central object, it becomes possible to build really complex workflows, piece by piece,

very easily.

But before diving deep into the {tidyverse}, let’s take a moment to discuss another infix

operator, %>%.

4.2 Smoking is bad for you, but pipes are your friend

The title of this section might sound weird at first, but by the end of it, you’ll get this (terrible) pun.

You probably know René Magritte’s painting La trahison des images (The Treachery of Images), which shows a pipe above the caption “Ceci n’est pas une pipe” (“This is not a pipe”).

It turns out there’s an R package from the tidyverse that is called magrittr. What does this

package do? This package introduced pipes to R, way before |> in R 4.1. Pipes are a concept

from the Unix operating system; if you’re using a GNU+Linux distribution or macOS, you’re basically

using a modern Unix (that’s an oversimplification, but I’m an economist by training, and

outrageously oversimplifying things is what we do, deal with it). The magrittr pipe is written as

%>%. Just like |>, %>% takes the left hand side to feed it as the first argument of the

function in the right hand side. Try the following:

library(magrittr)

16 %>% sqrt

## [1] 4

You can chain multiple functions, as you can with |>:

16 %>%

sqrt %>%

log

## [1] 1.386294

But unlike with |>, you can omit the parentheses (). %>% also has other features. For example, you can

pipe things to other infix operators. For example, +. You can use + as usual:

2 + 12

## [1] 14

Or as a prefix operator:

`+`(2, 12)

## [1] 14

You can use this notation with %>%:

16 %>% sqrt %>% `+`(18)

## [1] 22

This also works with |> since R version 4.2, but only if you use the _ pipe placeholder (passed to a named argument):

16 |> sqrt() |> `+`(x = _, 18)

## [1] 22

16 got fed to sqrt(), and the output of sqrt(16) (4) got fed to `+`(18)

(so we got `+`(4, 18) = 22). Without %>% you’d write the line just above like this:

sqrt(16) + 18

## [1] 22

Just like before, with |>, this might seem overly complicated, but using these pipes will

make our code much more readable. I’m sure you’ll be convinced by the end of this chapter.
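To see the readability argument on something slightly longer than a single call, here is a small sketch using base R’s |> (R 4.1 or later): the same computation written as nested calls and as a pipe chain. The nested version has to be read from the inside out, while the chain reads left to right, in the order the steps happen:

```r
x <- c(1, 4, 9, 16)

# Nested calls: read from the inside out
nested <- round(log(sum(sqrt(x))), 2)

# The same steps with |>: read from left to right
piped <- x |> sqrt() |> sum() |> log() |> round(2)

identical(nested, piped)  # TRUE
piped                     # 2.3
```

With {magrittr} loaded, replacing each |> with %>% gives the same result.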

%>% is not the only pipe operator in magrittr. There are also %T>%, %<>% and %$%. All have their

uses, but are basically shortcuts to some common tasks with %>% plus another function. Which

means that you can live without them, and because of this, I will not discuss them.
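One practical case for the _ placeholder mentioned above is piping a data frame into a function whose data argument is not the first one, such as lm(). A minimal sketch using the built-in mtcars data (this assumes R 4.2 or later, and the placeholder must be given to a named argument):

```r
# Keep only the 4-cylinder cars, then fit a linear model of mpg on weight.
# The first argument of lm() is the formula, so the data frame is sent
# to the named argument `data` through the `_` placeholder (R >= 4.2).
fit <- mtcars |>
  subset(cyl == 4) |>
  lm(mpg ~ wt, data = _)

coef(fit)
nobs(fit)  # 11: the number of 4-cylinder cars in mtcars
```

With %>%, the placeholder is a dot instead, as in lm(mpg ~ wt, data = .).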

4.3 The {tidyverse}’s enfant prodige: {dplyr}

The best way to get started with the tidyverse packages is to get to know {dplyr}. {dplyr}

provides a lot of very useful functions that make it very easy to get descriptive statistics or

add new columns to your data.

4.3.1 A first taste of data manipulation with {dplyr}

This section will walk you through a typical analysis using {dplyr} functions. Just go with it; I

will give more details in the next sections.

First, let’s load {dplyr} and the included starwars dataset. Let’s also take a look at the

first 6 lines of the dataset:

library(dplyr)

data(starwars)

head(starwars)

## # A tibble: 6 × 14

## name height mass hair_…¹ skin_…² eye_c…³ birth…⁴ sex gender homew…⁵

## <chr> <int> <dbl> <chr> <chr> <chr> <dbl> <chr> <chr> <chr>

## 1 Luke Skywal… 172 77 blond fair blue 19 male mascu… Tatooi…

## 2 C-3PO 167 75 <NA> gold yellow 112 none mascu… Tatooi…

## 3 R2-D2 96 32 <NA> white,… red 33 none mascu… Naboo

## 4 Darth Vader 202 136 none white yellow 41.9 male mascu… Tatooi…

## 5 Leia Organa 150 49 brown light brown 19 fema… femin… Aldera…

## 6 Owen Lars 178 120 brown,… light blue 52 male mascu… Tatooi…

## # … with 4 more variables: species <chr>, films <list>, vehicles <list>,

## # starships <list>, and abbreviated variable names ¹hair_color, ²skin_color,

## # ³eye_color, ⁴birth_year, ⁵homeworld

data(starwars) loads the example dataset called starwars that is included in the package

{dplyr}. As I said earlier, this is just an example; you could have loaded an external dataset,

from a .csv file for instance. This does not matter for what comes next.

Like we saw earlier, R includes a lot of functions for descriptive statistics, such as mean(),

sd(), cov(), and many more. What {dplyr} brings to the table is a grammar of data

manipulation that makes it very easy to apply descriptive statistics functions, or any other

function, to your data.

Just like before, we are going to compute the average height by sex:

starwars %>%

group_by(sex) %>%

summarise(mean_height = mean(height, na.rm = TRUE))

## # A tibble: 5 × 2

## sex mean_height

## <chr> <dbl>

## 1 female 169.

## 2 hermaphroditic 175

## 3 male 179.

## 4 none 131.

## 5 <NA> 181.

The very nice thing about using %>% and {dplyr} verbs/functions is that the code is really

readable. The above three lines can be translated like so in English:

Take the starwars dataset, then group by sex, then compute the mean height (for each subgroup) by omitting missing values.

%>% can be translated as “then”. Without %>%, you would need to write the code like this:

summarise(group_by(starwars, sex), mean(height, na.rm = TRUE))

## # A tibble: 5 × 2

## sex `mean(height, na.rm = TRUE)`

## <chr> <dbl>

## 1 female 169.

## 2 hermaphroditic 175

## 3 male 179.

## 4 none 131.

## 5 <NA> 181.

Unlike with the base approach, each function does only one thing. With base R, the function

aggregate() was also used to define the subgroups. This is not the case with {dplyr}: there is one

function to create the groups (group_by()) and then one function to compute the summaries

(summarise()). Also, group_by() creates a specific subgroup for individuals where sex is

missing. This is the last line in the data frame, where sex is NA. Another nice thing is that

you can specify the column containing the average height. I chose to name it mean_height.
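To make these two points concrete, here is a minimal sketch on a made-up tibble where one individual has a missing sex: group_by() puts that individual in its own NA group (listed last), and the name of each summary column is whatever we write on the left of =:

```r
library(dplyr)

# Made-up data: one individual with a missing sex, one with a missing height
toy <- tibble(sex    = c("female", "male", NA, "male"),
              height = c(160, 180, 175, NA))

res <- toy %>%
  group_by(sex) %>%
  summarise(mean_height = mean(height, na.rm = TRUE),  # column name chosen by us
            n_obs = n())
res
```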

Now, let’s suppose that we want to filter some data first:

starwars %>%

filter(gender == "masculine") %>%

group_by(sex) %>%

summarise(mean_height = mean(height, na.rm = TRUE))

## # A tibble: 3 × 2

## sex mean_height

## <chr> <dbl>

## 1 hermaphroditic 175

## 2 male 179.

## 3 none 140

Again, %>% makes the above lines of code very easy to read. Without it, one would need to

write:

summarise(group_by(filter(starwars, gender == "masculine"), sex), mean(height, na.rm = TRUE))

## # A tibble: 3 × 2

## sex `mean(height, na.rm = TRUE)`

## <chr> <dbl>

## 1 hermaphroditic 175

## 2 male 179.

## 3 none 140

I think you will agree with me that this is not very readable. One way to make it more readable would be to save intermediary variables:

filtered_data <- filter(starwars, gender == "masculine")

grouped_data <- group_by(filtered_data, sex)

summarise(grouped_data, mean(height, na.rm = TRUE))

## # A tibble: 3 × 2

## sex `mean(height, na.rm = TRUE)`

## <chr> <dbl>

## 1 hermaphroditic 175

## 2 male 179.

## 3 none 140

But this can get very tedious. Once you’re used to %>%, you won’t want to go back.

Before continuing and to make things clearer; filter(), group_by() and summarise() are

functions that are included in {dplyr}. %>% is actually a function from {magrittr}, but this

package gets loaded on the fly when you load {dplyr}, so you do not need to worry about it.

The results of all these operations that use {dplyr} functions are actually other datasets, or

tibbles. This means that you can save them in a variable, or write them to disk, and then work with

these as with any other dataset.

mean_height <- starwars %>%

group_by(sex) %>%

summarise(mean(height))

class(mean_height)

## [1] "tbl_df" "tbl" "data.frame"

head(mean_height)

## # A tibble: 5 × 2

## sex `mean(height)`

## <chr> <dbl>

## 1 female NA

## 2 hermaphroditic 175

## 3 male NA

## 4 none NA

## 5 <NA> NA

You could then write this data to disk using rio::export() for instance. If you need more than

the mean of the height, you can keep adding as many functions as needed (another advantage over

aggregate()):

summary_table <- starwars %>%

group_by(sex) %>%

summarise(mean_height = mean(height, na.rm = TRUE),

var_height = var(height, na.rm = TRUE),

n_obs = n())

summary_table

## # A tibble: 5 × 4

## sex mean_height var_height n_obs

## <chr> <dbl> <dbl> <int>

## 1 female 169. 235. 16

## 2 hermaphroditic 175 NA 1

## 3 male 179. 1297. 60

## 4 none 131. 2416. 6

## 5 <NA> 181. 8.33 4

I’ve added more functions, namely var(), to get the variance of height, and n(), which

is a function from {dplyr}, not base R, to get the number of observations. This is quite useful,

because we see that there is a group with only one individual. Let’s focus on the

sexes for which we have more than 1 individual. Since we saved all the previous operations (which

produce a tibble) in a variable, we can keep going from there:

summary_table2 <- summary_table %>%

filter(n_obs > 1)

summary_table2

## # A tibble: 4 × 4

## sex mean_height var_height n_obs

## <chr> <dbl> <dbl> <int>

## 1 female 169. 235. 16

## 2 male 179. 1297. 60

## 3 none 131. 2416. 6

## 4 <NA> 181. 8.33 4

As mentioned before, there are a lot of NAs in the earlier outputs; this is because by default, mean() and var()

return NA if even one single observation is NA. This is good, because it forces you to look at

the data to see what is going on. If you got a number even when there were NAs, you could

very easily miss these missing values. It is better for functions to fail early and often than the

opposite. This is why we explicitly use na.rm = TRUE for mean() and var().
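A minimal illustration of this "fail early" behaviour on a made-up vector: without na.rm = TRUE the missing value is impossible to overlook, and counting the NAs first tells you how many observations na.rm = TRUE will silently drop:

```r
heights <- c(172, 167, NA, 202)

mean(heights)                # NA: a single missing value makes the result NA
mean(heights, na.rm = TRUE)  # 180.3333, the mean of the 3 observed values
sum(is.na(heights))          # 1 missing value, worth checking before using na.rm
```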

Now let’s actually take a look at the rows where sex is NA:

starwars %>%

filter(is.na(sex))

## # A tibble: 4 × 14

## name height mass hair_…¹ skin_…² eye_c…³ birth…⁴ sex gender homew…⁵

## <chr> <int> <dbl> <chr> <chr> <chr> <dbl> <chr> <chr> <chr>

## 1 Ric Olié 183 NA brown fair blue NA <NA> <NA> Naboo

## 2 Quarsh Pana… 183 NA black dark brown 62 <NA> <NA> Naboo

## 3 Sly Moore 178 48 none pale white NA <NA> <NA> Umbara

## 4 Captain Pha… NA NA unknown unknown unknown NA <NA> <NA> <NA>

## # … with 4 more variables: species <chr>, films <list>, vehicles <list>,

## # starships <list>, and abbreviated variable names ¹hair_color, ²skin_color,

## # ³eye_color, ⁴birth_year, ⁵homeworld

There are only 4 rows where sex is NA. Let’s ignore them:

starwars %>%

filter(!is.na(sex)) %>%

group_by(sex) %>%

summarise(ave_height = mean(height, na.rm = TRUE),

var_height = var(height, na.rm = TRUE),

n_obs = n()) %>%

filter(n_obs > 1)

## # A tibble: 3 × 4

## sex ave_height var_height n_obs

## <chr> <dbl> <dbl> <int>

## 1 female 169. 235. 16

## 2 male 179. 1297. 60

## 3 none 131. 2416. 6

And why not compute the same table, but first add another stratifying variable?

starwars %>%

filter(!is.na(sex)) %>%

group_by(sex, eye_color) %>%

summarise(ave_height = mean(height, na.rm = TRUE),

var_height = var(height, na.rm = TRUE),

n_obs = n()) %>%

filter(n_obs > 1)

## `summarise()` has grouped output by 'sex'. You can override using the `.groups`

## argument.

## # A tibble: 12 × 5

## # Groups: sex [3]

## sex eye_color ave_height var_height n_obs

## <chr> <chr> <dbl> <dbl> <int>

## 1 female black 196. 612. 2

## 2 female blue 167 118. 6

## 3 female brown 160 42 5

## 4 female hazel 178 NA 2

## 5 male black 182 1197 7

## 6 male blue 190. 434. 12

## 7 male brown 167. 1663. 15

## 8 male orange 181. 1306. 7

## 9 male red 190. 0.5 2

## 10 male unknown 136 6498 2

## 11 male yellow 180. 2196. 9

## 12 none red 131 3571 3

OK, that’s it for a first taste. We have already discovered some very useful {dplyr} functions:

filter(), group_by() and summarise().

Now, we are going to learn about these functions in more detail.
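In the last example, summarise() printed a message about its .groups argument: after summarising by sex and eye_color, the result is still grouped by sex (only the last grouping level is dropped). Here is a minimal sketch on made-up data showing how to make that choice explicit, either keeping that behaviour with "drop_last" or removing all grouping with "drop":

```r
library(dplyr)

# Made-up data with two grouping variables
toy <- tibble(sex = c("f", "f", "m", "m"),
              eye = c("blue", "brown", "blue", "blue"),
              h   = c(160, 170, 180, 190))

# Drop only the last grouping level: the result stays grouped by sex
kept <- toy %>%
  group_by(sex, eye) %>%
  summarise(mean_h = mean(h), .groups = "drop_last")
group_vars(kept)     # "sex"

# Drop all grouping: the result is an ordinary, ungrouped tibble
dropped <- toy %>%
  group_by(sex, eye) %>%
  summarise(mean_h = mean(h), .groups = "drop")
group_vars(dropped)  # character(0)
```

This matters because later verbs such as filter() or mutate() work within each remaining group; setting .groups explicitly also silences the message.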

4.3.2 Filter the rows of a dataset with filter()

We’re going to use the Gasoline dataset from the plm package, so install that first:

install.packages("plm")

Then load the required data:

data(Gasoline, package = "plm")

and load dplyr:

library(dplyr)

This dataset gives the consumption of gasoline for 18 countries from 1960 to 1978. When you load

the data like this, it is a standard data.frame. {dplyr} functions can be used on standard

data.frame objects, but also on tibbles. tibbles are just like data frames, but with a better

print method (and other niceties). I’ll discuss the {tibble} package later, but for now, let’s

convert the data to a tibble and change its name, and also transform the country column to

lower case:

gasoline <- as_tibble(Gasoline)

gasoline <- gasoline %>%

mutate(country = tolower(country))

filter() is pretty straightforward. What if you would like to subset the data to focus on the

year 1969? Simple:

filter(gasoline, year == 1969)

## # A tibble: 18 × 6

## country year lgaspcar lincomep lrpmg lcarpcap

## <chr> <int> <dbl> <dbl> <dbl> <dbl>

## 1 austria 1969 4.05 -6.15 -0.559 -8.79

## 2 belgium 1969 3.85 -5.86 -0.355 -8.52

## 3 canada 1969 4.86 -5.56 -1.04 -8.10

## 4 denmark 1969 4.17 -5.72 -0.407 -8.47

## 5 france 1969 3.77 -5.84 -0.315 -8.37

## 6 germany 1969 3.90 -5.83 -0.589 -8.44

## 7 greece 1969 4.89 -6.59 -0.180 -10.7

## 8 ireland 1969 4.21 -6.38 -0.272 -8.95

## 9 italy 1969 3.74 -6.28 -0.248 -8.67

## 10 japan 1969 4.52 -6.16 -0.417 -9.61

## 11 netherla 1969 3.99 -5.88 -0.417 -8.63

## 12 norway 1969 4.09 -5.74 -0.338 -8.69

## 13 spain 1969 3.99 -5.60 0.669 -9.72

## 14 sweden 1969 3.99 -7.77 -2.73 -8.20

## 15 switzerl 1969 4.21 -5.91 -0.918 -8.47

## 16 turkey 1969 5.72 -7.39 -0.298 -12.5

## 17 u.k. 1969 3.95 -6.03 -0.383 -8.47

## 18 u.s.a. 1969 4.84 -5.41 -1.22 -7.79

Let’s use %>%, since we’re familiar with it now:

gasoline %>%

filter(year == 1969)

## # A tibble: 18 × 6

## country year lgaspcar lincomep lrpmg lcarpcap

## <chr> <int> <dbl> <dbl> <dbl> <dbl>

## 1 austria 1969 4.05 -6.15 -0.559 -8.79

## 2 belgium 1969 3.85 -5.86 -0.355 -8.52

## 3 canada 1969 4.86 -5.56 -1.04 -8.10

## 4 denmark 1969 4.17 -5.72 -0.407 -8.47

## 5 france 1969 3.77 -5.84 -0.315 -8.37

## 6 germany 1969 3.90 -5.83 -0.589 -8.44

## 7 greece 1969 4.89 -6.59 -0.180 -10.7

## 8 ireland 1969 4.21 -6.38 -0.272 -8.95

## 9 italy 1969 3.74 -6.28 -0.248 -8.67

## 10 japan 1969 4.52 -6.16 -0.417 -9.61

## 11 netherla 1969 3.99 -5.88 -0.417 -8.63

## 12 norway 1969 4.09 -5.74 -0.338 -8.69

## 13 spain 1969 3.99 -5.60 0.669 -9.72

## 14 sweden 1969 3.99 -7.77 -2.73 -8.20

## 15 switzerl 1969 4.21 -5.91 -0.918 -8.47

## 16 turkey 1969 5.72 -7.39 -0.298 -12.5

## 17 u.k. 1969 3.95 -6.03 -0.383 -8.47

## 18 u.s.a. 1969 4.84 -5.41 -1.22 -7.79

You can also filter more than just one year by using the %in% operator:

gasoline %>%

filter(year %in% seq(1969, 1973))

## # A tibble: 90 × 6

## country year lgaspcar lincomep lrpmg lcarpcap

## <chr> <int> <dbl> <dbl> <dbl> <dbl>

## 1 austria 1969 4.05 -6.15 -0.559 -8.79

## 2 austria 1970 4.08 -6.08 -0.597 -8.73

## 3 austria 1971 4.11 -6.04 -0.654 -8.64

## 4 austria 1972 4.13 -5.98 -0.596 -8.54

## 5 austria 1973 4.20 -5.90 -0.594 -8.49

## 6 belgium 1969 3.85 -5.86 -0.355 -8.52

## 7 belgium 1970 3.87 -5.80 -0.378 -8.45

## 8 belgium 1971 3.87 -5.76 -0.399 -8.41

## 9 belgium 1972 3.91 -5.71 -0.311 -8.36

## 10 belgium 1973 3.90 -5.64 -0.373 -8.31

## # … with 80 more rows

It is also possible to use between(), a helper function:

gasoline %>%

filter(between(year, 1969, 1973))

## # A tibble: 90 × 6

## country year lgaspcar lincomep lrpmg lcarpcap

## <chr> <int> <dbl> <dbl> <dbl> <dbl>

## 1 austria 1969 4.05 -6.15 -0.559 -8.79

## 2 austria 1970 4.08 -6.08 -0.597 -8.73

## 3 austria 1971 4.11 -6.04 -0.654 -8.64

## 4 austria 1972 4.13 -5.98 -0.596 -8.54

## 5 austria 1973 4.20 -5.90 -0.594 -8.49

## 6 belgium 1969 3.85 -5.86 -0.355 -8.52

## 7 belgium 1970 3.87 -5.80 -0.378 -8.45

## 8 belgium 1971 3.87 -5.76 -0.399 -8.41

## 9 belgium 1972 3.91 -5.71 -0.311 -8.36

## 10 belgium 1973 3.90 -5.64 -0.373 -8.31

## # … with 80 more rows

To select non-consecutive years:

gasoline %>%

filter(year %in% c(1969, 1973, 1977))

## # A tibble: 54 × 6

## country year lgaspcar lincomep lrpmg lcarpcap

## <chr> <int> <dbl> <dbl> <dbl> <dbl>

## 1 austria 1969 4.05 -6.15 -0.559 -8.79

## 2 austria 1973 4.20 -5.90 -0.594 -8.49

## 3 austria 1977 3.93 -5.83 -0.422 -8.25

## 4 belgium 1969 3.85 -5.86 -0.355 -8.52

## 5 belgium 1973 3.90 -5.64 -0.373 -8.31

## 6 belgium 1977 3.85 -5.56 -0.432 -8.14

## 7 canada 1969 4.86 -5.56 -1.04 -8.10

## 8 canada 1973 4.90 -5.41 -1.13 -7.94

## 9 canada 1977 4.81 -5.34 -1.07 -7.77

## 10 denmark 1969 4.17 -5.72 -0.407 -8.47

## # … with 44 more rows

%in% tests whether each element on its left is part of the set on its right.
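Note that %in% is not the same as ==, which compares element by element and recycles the shorter vector; this is a classic source of silently wrong filters. A small sketch on a made-up vector of years (base R only), which also shows the comparison that between() performs, since its bounds are inclusive:

```r
years <- c(1969, 1970, 1973, 1969)

# %in%: is each element one of the listed values?
years %in% c(1969, 1973)        # TRUE FALSE TRUE TRUE

# ==: pairwise comparison, with c(1969, 1973) recycled to length 4,
# so 1973 (3rd) is compared with 1969 and 1969 (4th) with 1973
years == c(1969, 1973)          # TRUE FALSE FALSE FALSE

# between(years, 1969, 1973) is a shortcut for this inclusive comparison
years >= 1969 & years <= 1973   # TRUE TRUE TRUE TRUE
```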

4.3.3 Select columns with select()

While filter() allows you to keep or discard rows of data, select() allows you to keep or

discard entire columns. To keep columns:

gasoline %>%

select(country, year, lrpmg)

## # A tibble: 342 × 3

## country year lrpmg

## <chr> <int> <dbl>

## 1 austria 1960 -0.335

## 2 austria 1961 -0.351

## 3 austria 1962 -0.380

## 4 austria 1963 -0.414

## 5 austria 1964 -0.445

## 6 austria 1965 -0.497

## 7 austria 1966 -0.467

## 8 austria 1967 -0.506

## 9 austria 1968 -0.522

## 10 austria 1969 -0.559

## # … with 332 more rows

To discard them:

gasoline %>%

select(-country, -year, -lrpmg)

## # A tibble: 342 × 3

## lgaspcar lincomep lcarpcap

## <dbl> <dbl> <dbl>

## 1 4.17 -6.47 -9.77

## 2 4.10 -6.43 -9.61

## 3 4.07 -6.41 -9.46

## 4 4.06 -6.37 -9.34

## 5 4.04 -6.32 -9.24

## 6 4.03 -6.29 -9.12

## 7 4.05 -6.25 -9.02

## 8 4.05 -6.23 -8.93

## 9 4.05 -6.21 -8.85

## 10 4.05 -6.15 -8.79

## # … with 332 more rows

To rename them:

gasoline %>%

select(country, date = year, lrpmg)

## # A tibble: 342 × 3

## country date lrpmg

## <chr> <int> <dbl>

## 1 austria 1960 -0.335

## 2 austria 1961 -0.351

## 3 austria 1962 -0.380

## 4 austria 1963 -0.414

## 5 austria 1964 -0.445

## 6 austria 1965 -0.497

## 7 austria 1966 -0.467

## 8 austria 1967 -0.506

## 9 austria 1968 -0.522

## 10 austria 1969 -0.559

## # … with 332 more rows

There’s also rename():

gasoline %>%

rename(date = year)

## # A tibble: 342 × 6

## country date lgaspcar lincomep lrpmg lcarpcap

## <chr> <int> <dbl> <dbl> <dbl> <dbl>

## 1 austria 1960 4.17 -6.47 -0.335 -9.77

## 2 austria 1961 4.10 -6.43 -0.351 -9.61

## 3 austria 1962 4.07 -6.41 -0.380 -9.46

## 4 austria 1963 4.06 -6.37 -0.414 -9.34

## 5 austria 1964 4.04 -6.32 -0.445 -9.24

## 6 austria 1965 4.03 -6.29 -0.497 -9.12

## 7 austria 1966 4.05 -6.25 -0.467 -9.02

## 8 austria 1967 4.05 -6.23 -0.506 -8.93

## 9 austria 1968 4.05 -6.21 -0.522 -8.85

## 10 austria 1969 4.05 -6.15 -0.559 -8.79

## # … with 332 more rows

rename() does not do any kind of selection: it only renames, and keeps all the other columns.

You can also use select() to re-order columns:

gasoline %>%

select(year, country, lrpmg, everything())

## # A tibble: 342 × 6

## year country lrpmg lgaspcar lincomep lcarpcap

## <int> <chr> <dbl> <dbl> <dbl> <dbl>

## 1 1960 austria -0.335 4.17 -6.47 -9.77

## 2 1961 austria -0.351 4.10 -6.43 -9.61

## 3 1962 austria -0.380 4.07 -6.41 -9.46

## 4 1963 austria -0.414 4.06 -6.37 -9.34

## 5 1964 austria -0.445 4.04 -6.32 -9.24

## 6 1965 austria -0.497 4.03 -6.29 -9.12

## 7 1966 austria -0.467 4.05 -6.25 -9.02

## 8 1967 austria -0.506 4.05 -6.23 -8.93

## 9 1968 austria -0.522 4.05 -6.21 -8.85

## 10 1969 austria -0.559 4.05 -6.15 -8.79

## # … with 332 more rows

everything() is a helper function that selects all the remaining columns; there are also starts_with() and ends_with(). For example, what if we are only interested in columns whose names start with “l”?

gasoline %>%

select(starts_with("l"))

## # A tibble: 342 × 4

## lgaspcar lincomep lrpmg lcarpcap

## <dbl> <dbl> <dbl> <dbl>

## 1 4.17 -6.47 -0.335 -9.77

## 2 4.10 -6.43 -0.351 -9.61

## 3 4.07 -6.41 -0.380 -9.46

## 4 4.06 -6.37 -0.414 -9.34

## 5 4.04 -6.32 -0.445 -9.24

## 6 4.03 -6.29 -0.497 -9.12

## 7 4.05 -6.25 -0.467 -9.02

## 8 4.05 -6.23 -0.506 -8.93

## 9 4.05 -6.21 -0.522 -8.85

## 10 4.05 -6.15 -0.559 -8.79

## # … with 332 more rows

ends_with() works in a similar fashion. There is also contains():

gasoline %>%

select(country, year, contains("car"))

## # A tibble: 342 × 4

## country year lgaspcar lcarpcap

## <chr> <int> <dbl> <dbl>

## 1 austria 1960 4.17 -9.77

## 2 austria 1961 4.10 -9.61

## 3 austria 1962 4.07 -9.46

## 4 austria 1963 4.06 -9.34

## 5 austria 1964 4.04 -9.24

## 6 austria 1965 4.03 -9.12

## 7 austria 1966 4.05 -9.02

## 8 austria 1967 4.05 -8.93

## 9 austria 1968 4.05 -8.85

## 10 austria 1969 4.05 -8.79

## # … with 332 more rows

You can read more about these helper functions here, but we’re going to look more into them in a coming section.
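Here is a short sketch of ends_with() and of combining several helpers in one select() call. It uses a hypothetical one-row tibble that reuses the gasoline column names. Columns come back in the order in which the helpers match them.

```r
library(dplyr)

# Hypothetical one-row tibble reusing the gasoline column names
toy <- tibble(country = "austria", year = 1960L,
              lgaspcar = 4.17, lincomep = -6.47,
              lrpmg = -0.335, lcarpcap = -9.77)

# ends_with() matches the end of the column name: only lgaspcar here
by_suffix <- toy %>% select(ends_with("car"))

# Helpers can be combined; columns are returned in the order they are matched
combined <- toy %>% select(starts_with("c"), contains("car"))
names(combined)  # "country" "lgaspcar" "lcarpcap"
```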

Another verb, similar to select(), is pull(). Let’s compare the two:

gasoline %>%

select(lrpmg)

## # A tibble: 342 × 1

## lrpmg

## <dbl>

## 1 -0.335

## 2 -0.351

## 3 -0.380

## 4 -0.414

## 5 -0.445

## 6 -0.497

## 7 -0.467

## 8 -0.506

## 9 -0.522

## 10 -0.559

## # … with 332 more rows

gasoline %>%

pull(lrpmg) %>%

head() # using head() because there are 342 elements in total

## [1] -0.3345476 -0.3513276 -0.3795177 -0.4142514 -0.4453354 -0.4970607

pull(), unlike select(), does not return a tibble but only the column you want, as a vector.
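The difference between select() and pull() is easiest to see by checking the classes of what they return. In this minimal sketch on a hypothetical three-row tibble, select() keeps a one-column tibble, while pull() returns the same plain vector you would get with base R's `$`.

```r
library(dplyr)

# Hypothetical three-row tibble
toy <- tibble(lrpmg = c(-0.335, -0.351, -0.380))

as_tbl <- toy %>% select(lrpmg)  # still a tibble, with one column
as_vec <- toy %>% pull(lrpmg)    # a plain numeric vector

class(as_tbl)
class(as_vec)  # "numeric"

# pull() gives the same vector as base R's $ or [[ ]] extraction
identical(as_vec, toy$lrpmg)
```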

4.3.4 Group the observations of your dataset with group_by()

group_by() is a very useful verb; as the name implies, it allows you to create groups and then, for instance, compute descriptive statistics by group. Let’s group our data by country:

gasoline %>%

group_by(country)

## # A tibble: 342 × 6

## # Groups: country [18]

## country year lgaspcar lincomep lrpmg lcarpcap

## <chr> <int> <dbl> <dbl> <dbl> <dbl>

## 1 austria 1960 4.17 -6.47 -0.335 -9.77

## 2 austria 1961 4.10 -6.43 -0.351 -9.61

## 3 austria 1962 4.07 -6.41 -0.380 -9.46

## 4 austria 1963 4.06 -6.37 -0.414 -9.34

## 5 austria 1964 4.04 -6.32 -0.445 -9.24

## 6 austria 1965 4.03 -6.29 -0.497 -9.12

## 7 austria 1966 4.05 -6.25 -0.467 -9.02

## 8 austria 1967 4.05 -6.23 -0.506 -8.93

## 9 austria 1968 4.05 -6.21 -0.522 -8.85

## 10 austria 1969 4.05 -6.15 -0.559 -8.79

## # … with 332 more rows

It looks like nothing much happened, but if you look at the second line of the output you can read the following:

## # Groups: country [18]

This means that the data is now grouped by country (there are 18 groups), and every computation you do from now on will take these groups into account. It is also possible to group by more than one variable:

gasoline %>%

group_by(country, year)

## # A tibble: 342 × 6

## # Groups: country, year [342]

## country year lgaspcar lincomep lrpmg lcarpcap

## <chr> <int> <dbl> <dbl> <dbl> <dbl>

## 1 austria 1960 4.17 -6.47 -0.335 -9.77

## 2 austria 1961 4.10 -6.43 -0.351 -9.61

## 3 austria 1962 4.07 -6.41 -0.380 -9.46

## 4 austria 1963 4.06 -6.37 -0.414 -9.34

## 5 austria 1964 4.04 -6.32 -0.445 -9.24

## 6 austria 1965 4.03 -6.29 -0.497 -9.12

## 7 austria 1966 4.05 -6.25 -0.467 -9.02

## 8 austria 1967 4.05 -6.23 -0.506 -8.93

## 9 austria 1968 4.05 -6.21 -0.522 -8.85

## 10 austria 1969 4.05 -6.15 -0.559 -8.79

## # … with 332 more rows

Here, each of the 342 country-year combinations is its own group. You can also remove the grouping with ungroup():

gasoline %>%

group_by(country, year) %>%

ungroup()

## # A tibble: 342 × 6

## country year lgaspcar lincomep lrpmg lcarpcap

## <chr> <int> <dbl> <dbl> <dbl> <dbl>

## 1 austria 1960 4.17 -6.47 -0.335 -9.77

## 2 austria 1961 4.10 -6.43 -0.351 -9.61

## 3 austria 1962 4.07 -6.41 -0.380 -9.46

## 4 austria 1963 4.06 -6.37 -0.414 -9.34

## 5 austria 1964 4.04 -6.32 -0.445 -9.24

## 6 austria 1965 4.03 -6.29 -0.497 -9.12

## 7 austria 1966 4.05 -6.25 -0.467 -9.02

## 8 austria 1967 4.05 -6.23 -0.506 -8.93

## 9 austria 1968 4.05 -6.21 -0.522 -8.85

## 10 austria 1969 4.05 -6.15 -0.559 -8.79

## # … with 332 more rows

Once your data is grouped, the operations that follow are executed inside each group.
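This minimal sketch shows what “executed inside each group” means in practice. It uses a hypothetical two-group tibble. group_vars() and n_groups() report the grouping, and mean(x) inside mutate() is computed separately for each country. After ungroup(), no grouping variables remain.

```r
library(dplyr)

# Hypothetical data: two groups, "a" and "b"
toy <- tibble(country = c("a", "a", "b"), x = c(1, 3, 10))

grouped <- toy %>% group_by(country)
group_vars(grouped)  # "country"
n_groups(grouped)    # 2

# mean(x) is computed separately inside each group
centred <- grouped %>% mutate(dev = x - mean(x))
centred$dev          # -1 1 0

# After ungroup(), no grouping variables remain
group_vars(ungroup(grouped))  # character(0)
```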

4.3.5 Get summary statistics with summarise()

Ok, now that we have learned the basic verbs, we can start to do more interesting stuff. For example, one might want to compute the average gasoline consumption in each country, for the whole period:

gasoline %>%

group_by(country) %>%

summarise(mean(lgaspcar))

## # A tibble: 18 × 2

## country `mean(lgaspcar)`

## <chr> <dbl>

## 1 austria 4.06

## 2 belgium 3.92

## 3 canada 4.86

## 4 denmark 4.19

## 5 france 3.82

## 6 germany 3.89

## 7 greece 4.88

## 8 ireland 4.23

## 9 italy 3.73

## 10 japan 4.70

## 11 netherla 4.08

## 12 norway 4.11

## 13 spain 4.06

## 14 sweden 4.01

## 15 switzerl 4.24

## 16 turkey 5.77

## 17 u.k. 3.98

## 18 u.s.a. 4.82

mean() was given as an argument to summarise(), which is a {dplyr} verb. What we get is another tibble that contains the grouping variable as well as the average per country.

We can also rename this column:

gasoline %>%

group_by(country) %>%

summarise(mean_gaspcar = mean(lgaspcar))

## # A tibble: 18 × 2

## country mean_gaspcar

## <chr> <dbl>

## 1 austria 4.06

## 2 belgium 3.92

## 3 canada 4.86

## 4 denmark 4.19

## 5 france 3.82

## 6 germany 3.89

## 7 greece 4.88

## 8 ireland 4.23

## 9 italy 3.73

## 10 japan 4.70

## 11 netherla 4.08

## 12 norway 4.11

## 13 spain 4.06

## 14 sweden 4.01

## 15 switzerl 4.24

## 16 turkey 5.77

## 17 u.k. 3.98

## 18 u.s.a. 4.82

Because the output is a tibble, we can continue to use {dplyr} verbs on it:

gasoline %>%

group_by(country) %>%

summarise(mean_gaspcar = mean(lgaspcar)) %>%

filter(country == "france")

## # A tibble: 1 × 2

## country mean_gaspcar

## <chr> <dbl>

## 1 france 3.82

summarise() is a very useful verb. For example, we can compute several descriptive statistics at once:

gasoline %>%

group_by(country) %>%

summarise(mean_gaspcar = mean(lgaspcar),

sd_gaspcar = sd(lgaspcar),

max_gaspcar = max(lgaspcar),

min_gaspcar = min(lgaspcar))

## # A tibble: 18 × 5

## country mean_gaspcar sd_gaspcar max_gaspcar min_gaspcar

## <chr> <dbl> <dbl> <dbl> <dbl>

## 1 austria 4.06 0.0693 4.20 3.92

## 2 belgium 3.92 0.103 4.16 3.82

## 3 canada 4.86 0.0262 4.90 4.81

## 4 denmark 4.19 0.158 4.50 4.00

## 5 france 3.82 0.0499 3.91 3.75

## 6 germany 3.89 0.0239 3.93 3.85

## 7 greece 4.88 0.255 5.38 4.48

## 8 ireland 4.23 0.0437 4.33 4.16

## 9 italy 3.73 0.220 4.05 3.38

## 10 japan 4.70 0.684 6.00 3.95

## 11 netherla 4.08 0.286 4.65 3.71

## 12 norway 4.11 0.123 4.44 3.96

## 13 spain 4.06 0.317 4.75 3.62

## 14 sweden 4.01 0.0364 4.07 3.91

## 15 switzerl 4.24 0.102 4.44 4.05

## 16 turkey 5.77 0.329 6.16 5.14

## 17 u.k. 3.98 0.0479 4.10 3.91

## 18 u.s.a. 4.82 0.0219 4.86 4.79

Because the output is a tibble, you can of course save it in a variable:

desc_gasoline <- gasoline %>%

group_by(country) %>%

summarise(mean_gaspcar = mean(lgaspcar),

sd_gaspcar = sd(lgaspcar),

max_gaspcar = max(lgaspcar),

min_gaspcar = min(lgaspcar))

Then you can answer questions such as: which country has the highest average gasoline consumption?

desc_gasoline %>%

filter(max(mean_gaspcar) == mean_gaspcar)

## # A tibble: 1 × 5

## country mean_gaspcar sd_gaspcar max_gaspcar min_gaspcar

## <chr> <dbl> <dbl> <dbl> <dbl>

## 1 turkey 5.77 0.329 6.16 5.14

Turns out it’s Turkey. What about the lowest consumption?

desc_gasoline %>%

filter(min(mean_gaspcar) == mean_gaspcar)

## # A tibble: 1 × 5

## country mean_gaspcar sd_gaspcar max_gaspcar min_gaspcar

## <chr> <dbl> <dbl> <dbl> <dbl>

## 1 italy 3.73 0.220 4.05 3.38

Because the output of {dplyr} verbs is a tibble, it is possible to keep working with it. This is one shortcoming of the base summary() function: the object it returns is not very easy to manipulate.
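Recent versions of {dplyr} (1.0.0 and later, as far as I know) also provide slice_max() and slice_min(). These do the same job as the filter(max(...) == ...) idiom used above. This sketch runs them on a hypothetical three-row extract of desc_gasoline, with values copied from the output above. Like the filter() version, they keep every row in case of ties by default.

```r
library(dplyr)

# Hypothetical subset of desc_gasoline, values copied from the output above
desc_toy <- tibble(country = c("france", "italy", "turkey"),
                   mean_gaspcar = c(3.82, 3.73, 5.77))

# Keeps the row(s) with the largest / smallest value
highest <- desc_toy %>% slice_max(mean_gaspcar, n = 1)
lowest  <- desc_toy %>% slice_min(mean_gaspcar, n = 1)
highest$country  # "turkey"
lowest$country   # "italy"
```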

4.3.6 Adding columns with mutate() and transmute()

mutate() adds a column to the tibble, which can contain any transformation of any other

variable:

gasoline %>%

group_by(country) %>%

mutate(n())

## # A tibble: 342 × 7

## # Groups: country [18]

## country year lgaspcar lincomep lrpmg lcarpcap `n()`

## <chr> <int> <dbl> <dbl> <dbl> <dbl> <int>

## 1 austria 1960 4.17 -6.47 -0.335 -9.77 19

## 2 austria 1961 4.10 -6.43 -0.351 -9.61 19

## 3 austria 1962 4.07 -6.41 -0.380 -9.46 19

## 4 austria 1963 4.06 -6.37 -0.414 -9.34 19

## 5 austria 1964 4.04 -6.32 -0.445 -9.24 19

## 6 austria 1965 4.03 -6.29 -0.497 -9.12 19

## 7 austria 1966 4.05 -6.25 -0.467 -9.02 19

## 8 austria 1967 4.05 -6.23 -0.506 -8.93 19

## 9 austria 1968 4.05 -6.21 -0.522 -8.85 19

## 10 austria 1969 4.05 -6.15 -0.559 -8.79 19

## # … with 332 more rows

Using mutate() I’ve added a column that counts how many times the country appears in the tibble,

using n(), another {dplyr} function. There’s also count() and tally(), which we are going to

see further down. It is also possible to rename the column on the fly:

gasoline %>%

group_by(country) %>%

mutate(count = n())

## # A tibble: 342 × 7

## # Groups: country [18]

## country year lgaspcar lincomep lrpmg lcarpcap count

## <chr> <int> <dbl> <dbl> <dbl> <dbl> <int>

## 1 austria 1960 4.17 -6.47 -0.335 -9.77 19

## 2 austria 1961 4.10 -6.43 -0.351 -9.61 19

## 3 austria 1962 4.07 -6.41 -0.380 -9.46 19

## 4 austria 1963 4.06 -6.37 -0.414 -9.34 19

## 5 austria 1964 4.04 -6.32 -0.445 -9.24 19

## 6 austria 1965 4.03 -6.29 -0.497 -9.12 19

## 7 austria 1966 4.05 -6.25 -0.467 -9.02 19

## 8 austria 1967 4.05 -6.23 -0.506 -8.93 19

## 9 austria 1968 4.05 -6.21 -0.522 -8.85 19

## 10 austria 1969 4.05 -6.15 -0.559 -8.79 19

## # … with 332 more rows

It is possible to do any arbitrary operation:

gasoline %>%

group_by(country) %>%

mutate(spam = exp(lgaspcar + lincomep))

## # A tibble: 342 × 7

## # Groups: country [18]

## country year lgaspcar lincomep lrpmg lcarpcap spam

## <chr> <int> <dbl> <dbl> <dbl> <dbl> <dbl>

## 1 austria 1960 4.17 -6.47 -0.335 -9.77 0.100

## 2 austria 1961 4.10 -6.43 -0.351 -9.61 0.0978

## 3 austria 1962 4.07 -6.41 -0.380 -9.46 0.0969

## 4 austria 1963 4.06 -6.37 -0.414 -9.34 0.0991

## 5 austria 1964 4.04 -6.32 -0.445 -9.24 0.102

## 6 austria 1965 4.03 -6.29 -0.497 -9.12 0.104

## 7 austria 1966 4.05 -6.25 -0.467 -9.02 0.110

## 8 austria 1967 4.05 -6.23 -0.506 -8.93 0.113

## 9 austria 1968 4.05 -6.21 -0.522 -8.85 0.115

## 10 austria 1969 4.05 -6.15 -0.559 -8.79 0.122

## # … with 332 more rows

transmute() is the same as mutate(), but only returns the created variable (plus the grouping variable, here country):

gasoline %>%

group_by(country) %>%

transmute(spam = exp(lgaspcar + lincomep))

## # A tibble: 342 × 2

## # Groups: country [18]

## country spam

## <chr> <dbl>

## 1 austria 0.100

## 2 austria 0.0978

## 3 austria 0.0969

## 4 austria 0.0991

## 5 austria 0.102

## 6 austria 0.104

## 7 austria 0.110

## 8 austria 0.113

## 9 austria 0.115

## 10 austria 0.122

## # … with 332 more rows

4.3.7 Joining tibbles with full_join(), left_join(), right_join() and all the others

I will end this section on {dplyr} with some very useful verbs: the *_join() verbs. Let’s start by loading another dataset from the {plm} package, SumHes, converting it to a tibble with lower-case country names, and saving it under the name pwt:

data(SumHes, package = "plm")

pwt <- SumHes %>%

as_tibble() %>%

mutate(country = tolower(country))

Let’s take a quick look at the data:

glimpse(pwt)

## Rows: 3,250

## Columns: 7

## $ year <int> 1960, 1961, 1962, 1963, 1964, 1965, 1966, 1967, 1968, 1969, 19…

## $ country <chr> "algeria", "algeria", "algeria", "algeria", "algeria", "algeri…

## $ opec <fct> no, no, no, no, no, no, no, no, no, no, no, no, no, no, no, no…

## $ com <fct> no, no, no, no, no, no, no, no, no, no, no, no, no, no, no, no…

## $ pop <int> 10800, 11016, 11236, 11460, 11690, 11923, 12267, 12622, 12986,…

## $ gdp <int> 1723, 1599, 1275, 1517, 1589, 1584, 1548, 1600, 1758, 1835, 18…

## $ sr <dbl> 19.9, 21.1, 15.0, 13.9, 10.6, 11.0, 8.3, 11.3, 15.1, 18.2, 19.…

We can merge both gasoline and pwt by country and year, as these two variables are common to

both datasets. There are more countries and years in the pwt dataset, so when merging both, and

depending on which function you use, you will either have NA’s for the variables where there is

no match, or rows that will be dropped. Let’s start with full_join:

gas_pwt_full <- gasoline %>%

full_join(pwt, by = c("country", "year"))

Let’s see which countries and years are included:

gas_pwt_full %>%

count(country, year)

## # A tibble: 3,307 × 3

## country year n

## <chr> <int> <int>

## 1 algeria 1960 1

## 2 algeria 1961 1

## 3 algeria 1962 1

## 4 algeria 1963 1

## 5 algeria 1964 1

## 6 algeria 1965 1

## 7 algeria 1966 1

## 8 algeria 1967 1

## 9 algeria 1968 1

## 10 algeria 1969 1

## # … with 3,297 more rows

As you can see, every country and year was included, but what happened to, say, the U.S.S.R.? This country

is in pwt but not in gasoline at all:

gas_pwt_full %>%

filter(country == "u.s.s.r.")

## # A tibble: 26 × 11

## country year lgaspcar lincomep lrpmg lcarp…¹ opec com pop gdp sr

## <chr> <int> <dbl> <dbl> <dbl> <dbl> <fct> <fct> <int> <int> <dbl>

## 1 u.s.s.r. 1960 NA NA NA NA no yes 214400 2397 37.9

## 2 u.s.s.r. 1961 NA NA NA NA no yes 217896 2542 39.4

## 3 u.s.s.r. 1962 NA NA NA NA no yes 221449 2656 38.4

## 4 u.s.s.r. 1963 NA NA NA NA no yes 225060 2681 38.4

## 5 u.s.s.r. 1964 NA NA NA NA no yes 227571 2854 39.5

## 6 u.s.s.r. 1965 NA NA NA NA no yes 230109 3049 39.9

## 7 u.s.s.r. 1966 NA NA NA NA no yes 232676 3247 39.9

## 8 u.s.s.r. 1967 NA NA NA NA no yes 235272 3454 40.2

## 9 u.s.s.r. 1968 NA NA NA NA no yes 237896 3730 40.6

## 10 u.s.s.r. 1969 NA NA NA NA no yes 240550 3808 37.9

## # … with 16 more rows, and abbreviated variable name ¹lcarpcap

As you probably guessed, the variables from gasoline that are not included in pwt are filled with

NAs. One could remove all these lines and only keep countries for which these variables are not

NA everywhere with filter(), but there is a simpler solution:

gas_pwt_inner <- gasoline %>%

inner_join(pwt, by = c("country", "year"))

Let’s use tabyl() from the {janitor} package, which is a very nice alternative to the table() function from base R:

library(janitor)

gas_pwt_inner %>%

tabyl(country)

## country n percent

## austria 19 0.06666667

## belgium 19 0.06666667

## canada 19 0.06666667

## denmark 19 0.06666667

## france 19 0.06666667

## greece 19 0.06666667

## ireland 19 0.06666667

## italy 19 0.06666667

## japan 19 0.06666667

## norway 19 0.06666667

## spain 19 0.06666667

## sweden 19 0.06666667

## turkey 19 0.06666667

## u.k. 19 0.06666667

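## u.s.a. 19 0.06666667

Only countries with values in both datasets were returned. That is almost every country from gasoline, apart from Germany, the Netherlands and Switzerland. Germany is called “germany west” in pwt and “germany” in gasoline; I left it as is to provide an example of a country not in pwt. The Netherlands and Switzerland are stored under the truncated names “netherla” and “switzerl” in gasoline, which presumably do not match their names in pwt. Let’s also look at the variables: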

glimpse(gas_pwt_inner)

## Rows: 285

## Columns: 11

## $ country <chr> "austria", "austria", "austria", "austria", "austria", "austr…

## $ year <int> 1960, 1961, 1962, 1963, 1964, 1965, 1966, 1967, 1968, 1969, 1…

## $ lgaspcar <dbl> 4.173244, 4.100989, 4.073177, 4.059509, 4.037689, 4.033983, 4…

## $ lincomep <dbl> -6.474277, -6.426006, -6.407308, -6.370679, -6.322247, -6.294…

## $ lrpmg <dbl> -0.3345476, -0.3513276, -0.3795177, -0.4142514, -0.4453354, -…

## $ lcarpcap <dbl> -9.766840, -9.608622, -9.457257, -9.343155, -9.237739, -9.123…

## $ opec <fct> no, no, no, no, no, no, no, no, no, no, no, no, no, no, no, n…

## $ com <fct> no, no, no, no, no, no, no, no, no, no, no, no, no, no, no, n…

## $ pop <int> 7048, 7087, 7130, 7172, 7215, 7255, 7308, 7338, 7362, 7384, 7…

## $ gdp <int> 5143, 5388, 5481, 5688, 5978, 6144, 6437, 6596, 6847, 7162, 7…

## $ sr <dbl> 24.3, 24.5, 23.3, 22.9, 25.2, 25.2, 26.7, 25.6, 25.7, 26.1, 2…

The variables from both datasets are in the joined data.

Contrast this to semi_join():

gas_pwt_semi <- gasoline %>%

semi_join(pwt, by = c("country", "year"))

glimpse(gas_pwt_semi)

## Rows: 285

## Columns: 6

## $ country <chr> "austria", "austria", "austria", "austria", "austria", "austr…

## $ year <int> 1960, 1961, 1962, 1963, 1964, 1965, 1966, 1967, 1968, 1969, 1…

## $ lgaspcar <dbl> 4.173244, 4.100989, 4.073177, 4.059509, 4.037689, 4.033983, 4…

## $ lincomep <dbl> -6.474277, -6.426006, -6.407308, -6.370679, -6.322247, -6.294…

## $ lrpmg <dbl> -0.3345476, -0.3513276, -0.3795177, -0.4142514, -0.4453354, -…

## $ lcarpcap <dbl> -9.766840, -9.608622, -9.457257, -9.343155, -9.237739, -9.123…

gas_pwt_semi %>%

tabyl(country)

## country n percent

## austria 19 0.06666667

## belgium 19 0.06666667

## canada 19 0.06666667

## denmark 19 0.06666667

## france 19 0.06666667

## greece 19 0.06666667

## ireland 19 0.06666667

## italy 19 0.06666667

## japan 19 0.06666667

## norway 19 0.06666667

## spain 19 0.06666667

## sweden 19 0.06666667

## turkey 19 0.06666667

## u.k. 19 0.06666667

## u.s.a. 19 0.06666667

Only the columns of gasoline are returned, and only the rows of gasoline that were matched with rows from pwt. semi_join() is not a commutative operation:

pwt_gas_semi <- pwt %>%

semi_join(gasoline, by = c("country", "year"))

glimpse(pwt_gas_semi)

## Rows: 285

## Columns: 7

## $ year <int> 1960, 1961, 1962, 1963, 1964, 1965, 1966, 1967, 1968, 1969, 19…

## $ country <chr> "canada", "canada", "canada", "canada", "canada", "canada", "c…

## $ opec <fct> no, no, no, no, no, no, no, no, no, no, no, no, no, no, no, no…

## $ com <fct> no, no, no, no, no, no, no, no, no, no, no, no, no, no, no, no…

## $ pop <int> 17910, 18270, 18614, 18963, 19326, 19678, 20049, 20411, 20744,…

## $ gdp <int> 7258, 7261, 7605, 7876, 8244, 8664, 9093, 9231, 9582, 9975, 10…

## $ sr <dbl> 22.7, 21.5, 22.1, 21.9, 22.9, 24.8, 25.4, 23.1, 22.6, 23.4, 21…

pwt_gas_semi %>%

tabyl(country)

## country n percent

## austria 19 0.06666667

## belgium 19 0.06666667

## canada 19 0.06666667

## denmark 19 0.06666667

## france 19 0.06666667

## greece 19 0.06666667

## ireland 19 0.06666667

## italy 19 0.06666667

## japan 19 0.06666667

## norway 19 0.06666667

## spain 19 0.06666667

## sweden 19 0.06666667

## turkey 19 0.06666667

## u.k. 19 0.06666667

## u.s.a. 19 0.06666667

The rows cover the same countries and years, but the columns differ: each result only contains the columns of its first argument.
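To contrast semi_join() with inner_join(), here is a sketch on two hypothetical tibbles, x and y, in which the key "a" appears twice in y. inner_join() adds y's columns and repeats the matching row of x once per match. semi_join() only filters x: it keeps x's columns and never duplicates its rows. Swapping the arguments returns y's columns instead.

```r
library(dplyr)

# Hypothetical tables; "a" appears twice in y on purpose
x <- tibble(country = c("a", "b", "c"), gas = c(1, 2, 3))
y <- tibble(country = c("a", "a", "b"), gdp = c(10, 11, 20))

inner    <- inner_join(x, y, by = "country")  # 3 rows: "a" repeated, gdp added
semi     <- semi_join(x, y, by = "country")   # 2 rows: columns of x only
semi_rev <- semi_join(y, x, by = "country")   # 3 rows: columns of y only

names(semi)      # "country" "gas"
names(semi_rev)  # "country" "gdp"
```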

left_join() and right_join() return all the rows from either the dataset on the “left” (the first argument of the function) or the one on the “right” (the second argument of the function), together with all the columns from both datasets. So depending on which countries you’re interested in, you’re going to use one or the other:

gas_pwt_left <- gasoline %>%

left_join(pwt, by = c("country", "year"))

gas_pwt_left %>%

tabyl(country)

## country n percent

## austria 19 0.05555556

## belgium 19 0.05555556

## canada 19 0.05555556

## denmark 19 0.05555556

## france 19 0.05555556

## germany 19 0.05555556

## greece 19 0.05555556

## ireland 19 0.05555556

## italy 19 0.05555556

## japan 19 0.05555556

## netherla 19 0.05555556

## norway 19 0.05555556

## spain 19 0.05555556

## sweden 19 0.05555556

## switzerl 19 0.05555556

## turkey 19 0.05555556

## u.k. 19 0.05555556

## u.s.a. 19 0.05555556

gas_pwt_right <- gasoline %>%

right_join(pwt, by = c("country", "year"))

gas_pwt_right %>%

tabyl(country) %>%

head()

## country n percent

## algeria 26 0.008

## angola 26 0.008

## argentina 26 0.008

## australia 26 0.008

## austria 26 0.008

## bangladesh 26 0.008

The last merge function is anti_join():

gas_pwt_anti <- gasoline %>%

anti_join(pwt, by = c("country", "year"))

glimpse(gas_pwt_anti)

## Rows: 57

## Columns: 6

## $ country <chr> "germany", "germany", "germany", "germany", "germany", "germa…

## $ year <int> 1960, 1961, 1962, 1963, 1964, 1965, 1966, 1967, 1968, 1969, 1…

## $ lgaspcar <dbl> 3.916953, 3.885345, 3.871484, 3.848782, 3.868993, 3.861049, 3…

## $ lincomep <dbl> -6.159837, -6.120923, -6.094258, -6.068361, -6.013442, -5.966…

## $ lrpmg <dbl> -0.1859108, -0.2309538, -0.3438417, -0.3746467, -0.3996526, -…

## $ lcarpcap <dbl> -9.342481, -9.183841, -9.037280, -8.913630, -8.811013, -8.711…

gas_pwt_anti %>%

tabyl(country)

## country n percent

## germany 19 0.3333333

## netherla 19 0.3333333

## switzerl 19 0.3333333

gas_pwt_anti has the columns of the gasoline dataset, and only the rows from gasoline that have no match in pwt: those of the three countries “germany”, “netherla” and “switzerl”.
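To summarise the joins side by side, here is a sketch on two hypothetical two-row tibbles that mimic gasoline and pwt. The values for austria, germany and algeria in 1960 are copied from the outputs above. Only austria is in both tables, so the row counts differ from one join to the next.

```r
library(dplyr)

# Hypothetical mini versions of gasoline and pwt, sharing the keys country and year
gas_toy <- tibble(country = c("austria", "germany"), year = 1960L,
                  lgaspcar = c(4.17, 3.92))
pwt_toy <- tibble(country = c("austria", "algeria"), year = 1960L,
                  gdp = c(5143L, 1723L))
keys <- c("country", "year")

full  <- full_join(gas_toy, pwt_toy, by = keys)   # austria, germany, algeria
inner <- inner_join(gas_toy, pwt_toy, by = keys)  # austria only
left  <- left_join(gas_toy, pwt_toy, by = keys)   # austria, germany (gdp is NA)
right <- right_join(gas_toy, pwt_toy, by = keys)  # austria, algeria (lgaspcar is NA)
semi  <- semi_join(gas_toy, pwt_toy, by = keys)   # austria, gas_toy columns only
anti  <- anti_join(gas_toy, pwt_toy, by = keys)   # germany, gas_toy columns only

# Number of rows returned by each join
sapply(list(full = full, inner = inner, left = left,
            right = right, semi = semi, anti = anti), nrow)
```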

That was it for the basic {dplyr} verbs. Next, we’re going to learn about {tidyr}.

4.4 Reshaping and sprucing up data with {tidyr}

Note: this section is going to be a lot harder than anything you’ve seen until now. Reshaping data is tricky, and to really grok it, you need time, and you need to run each line, and see what happens. Take your time, and don’t be discouraged.

Another important package from the {tidyverse} that goes hand in hand with {dplyr} is {tidyr}.

{tidyr} is the package you need when it’s time to reshape data.

I will start by presenting pivot_wider() and pivot_longer().

4.4.1 pivot_wider() and pivot_longer()

Let’s first create a fake dataset:

library(tidyr)

survey_data <- tribble(

~id, ~variable, ~value,

1, "var1", 1,

1, "var2", 0.2,

NA, "var3", 0.3,

2, "var1", 1.4,

2, "var2", 1.9,

2, "var3", 4.1,

3, "var1", 0.1,

3, "var2", 2.8,

3, "var3", 8.9,

4, "var1", 1.7,

NA, "var2", 1.9,

4, "var3", 7.6

)

head(survey_data)

## # A tibble: 6 × 3

## id variable value

## <dbl> <chr> <dbl>

## 1 1 var1 1

## 2 1 var2 0.2

## 3 NA var3 0.3

## 4 2 var1 1.4

## 5 2 var2 1.9

## 6 2 var3 4.1

I used the tribble() function from the {tibble} package to create this fake dataset. I’ll discuss this package later; for now, let’s focus on {tidyr}.

Let’s suppose that we need the data to be in the wide format, which means var1, var2 and var3 need to be their own columns. To do this, we use the pivot_wider() function. Why wide? Because the data set becomes wider: it gains columns and loses rows compared to the long format.

survey_data %>%

pivot_wider(id_cols = id,

names_from = variable,

values_from = value)

## # A tibble: 5 × 4

## id var1 var2 var3

## <dbl> <dbl> <dbl> <dbl>

## 1 1 1 0.2 NA

## 2 NA NA 1.9 0.3

## 3 2 1.4 1.9 4.1

## 4 3 0.1 2.8 8.9

## 5 4 1.7 NA 7.6

Let’s go through pivot_wider()’s arguments. The first is id_cols =, which takes the variable that uniquely identifies the rows. Notice that the two rows with a missing id are treated as one identifier, NA, and so end up in the same row. names_from = is where you input the variable that will generate the names of the new columns. In our case, the variable column has three distinct values, var1, var2 and var3, and these are now the names of the new columns. Finally, values_from = is where you specify the column containing the values that will fill the data frame.

I find the argument names names_from = and values_from = quite explicit.
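To see these arguments at work, and to see that the reshape can be undone, here is a sketch on a hypothetical long tibble with no missing ids. pivot_wider() builds one column per distinct value of `variable`. pivot_longer() takes those columns back into `variable`/`value` pairs with its names_to and values_to arguments.

```r
library(dplyr)
library(tidyr)

# Hypothetical long data without missing ids
long <- tibble(
  id       = c(1, 1, 2, 2),
  variable = c("var1", "var2", "var1", "var2"),
  value    = c(1, 0.2, 1.4, 1.9)
)

# Long -> wide: one row per id, one column per distinct value of `variable`
wide <- long %>%
  pivot_wider(id_cols = id, names_from = variable, values_from = value)

# Wide -> long again: pivot_longer() undoes the reshape
back <- wide %>%
  pivot_longer(cols = c(var1, var2), names_to = "variable", values_to = "value")
```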

As you can see, there are some missing values. Let’s suppose that we know that these missing values

are true 0’s. pivot_wider() has an argument called values_fill = that makes it easy to replace

the missing values:

survey_data %>%

pivot_wider(id_cols = id,

names_from = variable,

values_from = value,

values_fill = list(value = 0))

## # A tibble: 5 × 4

## id var1 var2 var3

## <dbl> <dbl> <dbl> <dbl>

## 1 1 1 0.2 0

## 2 NA 0 1.9 0.3

## 3 2 1.4 1.9 4.1

## 4 3 0.1 2.8 8.9

## 5 4 1.7 0 7.6

Here, values_fill = receives a named list that maps each value column (here, only value) to the value that should replace its missing entries.
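In recent versions of {tidyr} (1.1.0 and later, to my knowledge), values_fill also accepts a single scalar, which is convenient when there is only one value column. This sketch checks that both forms give the same result on a hypothetical three-row tibble where id 2 has no var2 entry. Without id_cols, pivot_wider() uses the remaining column, id, as identifier.

```r
library(dplyr)
library(tidyr)

# Hypothetical long data: the combination id 2 / var2 is absent
long <- tibble(id = c(1, 1, 2),
               variable = c("var1", "var2", "var1"),
               value = c(1, 0.2, 1.4))

# Scalar form
wide_scalar <- long %>%
  pivot_wider(names_from = variable, values_from = value, values_fill = 0)

# Named-list form, as used above
wide_list <- long %>%
  pivot_wider(names_from = variable, values_from = value,
              values_fill = list(value = 0))

wide_scalar$var2  # 0.2 0
```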

Let’s now use another dataset, which you can get from

here

(downloaded from: http://www.statistiques.public.lu/stat/TableViewer/tableView.aspx?ReportId=12950&IF_Language=eng&MainTheme=2&FldrName=3&RFPath=91). This data set gives the unemployment rate for each Luxembourgish

canton from 2001 to 2015. We will come back to this data later on to learn how to plot it. For now,

let’s use it to learn more about {tidyr}.

unemp_lux_data <- rio::import(

"https://raw.githubusercontent.com/b-rodrigues/modern_R/master/datasets/unemployment/all/unemployment_lux_all.csv"

)

head(unemp_lux_data)

## division year active_population of_which_non_wage_earners

## 1 Beaufort 2001 688 85

## 2 Beaufort 2002 742 85

## 3 Beaufort 2003 773 85

## 4 Beaufort 2004 828 80

## 5 Beaufort 2005 866 96

## 6 Beaufort 2006 893 87

## of_which_wage_earners total_employed_population unemployed

## 1 568 653 35

## 2 631 716 26

## 3 648 733 40

## 4 706 786 42

## 5 719 815 51

## 6 746 833 60

## unemployment_rate_in_percent

## 1 5.09

## 2 3.50

## 3 5.17

## 4 5.07

## 5 5.89

## 6 6.72

Now, let’s suppose that for our purposes, it would make more sense to have the data in a wide format, where columns are “division times year” and the value is the unemployment rate. This can easily be done by providing more columns to names_from =.

unemp_lux_data2 <- unemp_lux_data %>%

filter(year %in% seq(2013, 2017),

str_detect(division, ".*ange$"),

!str_detect(division, ".*Canton.*")) %>%

select(division, year, unemployment_rate_in_percent) %>%

rowid_to_column()

unemp_lux_data2 %>%

pivot_wider(names_from = c(division, year),

values_from = unemployment_rate_in_percent)

## # A tibble: 48 × 49

## rowid Bertr…¹ Bertr…² Bertr…³ Diffe…⁴ Diffe…⁵ Diffe…⁶ Dudel…⁷ Dudel…⁸ Dudel…⁹

## <int> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl>

## 1 1 5.69 NA NA NA NA NA NA NA NA

## 2 2 NA 5.65 NA NA NA NA NA NA NA

## 3 3 NA NA 5.35 NA NA NA NA NA NA

## 4 4 NA NA NA 13.2 NA NA NA NA NA

## 5 5 NA NA NA NA 12.6 NA NA NA NA

## 6 6 NA NA NA NA NA 11.4 NA NA NA

## 7 7 NA NA NA NA NA NA 9.35 NA NA

## 8 8 NA NA NA NA NA NA NA 9.37 NA

## 9 9 NA NA NA NA NA NA NA NA 8.53

## 10 10 NA NA NA NA NA NA NA NA NA

## # … with 38 more rows, 39 more variables: Frisange_2013 <dbl>,

## # Frisange_2014 <dbl>, Frisange_2015 <dbl>, Hesperange_2013 <dbl>,

## # Hesperange_2014 <dbl>, Hesperange_2015 <dbl>, Leudelange_2013 <dbl>,

## # Leudelange_2014 <dbl>, Leudelange_2015 <dbl>, Mondercange_2013 <dbl>,

## # Mondercange_2014 <dbl>, Mondercange_2015 <dbl>, Pétange_2013 <dbl>,

## # Pétange_2014 <dbl>, Pétange_2015 <dbl>, Rumelange_2013 <dbl>,

## # Rumelange_2014 <dbl>, Rumelange_2015 <dbl>, Schifflange_2013 <dbl>, …

In the filter() statement, I only kept data from 2013 to 2017, and “division”s ending with the string “ange” (“division” can be a canton or a commune, for example “Canton Redange”, a canton, or “Hesperange”, a commune). I also removed the cantons, as I’m only interested in communes. If you don’t understand this filter() statement, don’t fret; it is not important for what follows. I then only kept the columns I’m interested in and pivoted the data to a wide format. Also, I needed to add a unique identifier to the data frame. For this, I used the rowid_to_column() function from the {tibble} package, which adds a new column to the data frame with an id going from 1 to the number of rows in the data frame. If I did not add this identifier, the statement would still work:

unemp_lux_data3 <- unemp_lux_data %>%

filter(year %in% seq(2013, 2017), str_detect(division, ".*ange$"), !str_detect(division, ".*Canton.*")) %>%

select(division, year, unemployment_rate_in_percent)

unemp_lux_data3 %>%

pivot_wider(names_from = c(division, year), values_from = unemployment_rate_in_percent)

## # A tibble: 1 × 48

## Bertrange_2013 Bertr…¹ Bertr…² Diffe…³ Diffe…⁴ Diffe…⁵ Dudel…⁶ Dudel…⁷ Dudel…⁸

## <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl>

## 1 5.69 5.65 5.35 13.2 12.6 11.4 9.35 9.37 8.53

## # … with 39 more variables: Frisange_2013 <dbl>, Frisange_2014 <dbl>,

## # Frisange_2015 <dbl>, Hesperange_2013 <dbl>, Hesperange_2014 <dbl>,

## # Hesperange_2015 <dbl>, Leudelange_2013 <dbl>, Leudelange_2014 <dbl>,

## # Leudelange_2015 <dbl>, Mondercange_2013 <dbl>, Mondercange_2014 <dbl>,

## # Mondercange_2015 <dbl>, Pétange_2013 <dbl>, Pétange_2014 <dbl>,

## # Pétange_2015 <dbl>, Rumelange_2013 <dbl>, Rumelange_2014 <dbl>,

## # Rumelange_2015 <dbl>, Schifflange_2013 <dbl>, Schifflange_2014 <dbl>, …

The result actually looks even better, but only because there are no repeated values: there is only one unemployment rate for each “commune times year”. I will come back to this later on, with another example that might be clearer. These last two code blocks are intense; make sure you go through each line step by step and understand what is going on.
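Here is how two names_from columns are turned into column names, shown on a small scale. The tibble is hypothetical and its rates are illustrative only. No identifier column remains, so everything lands in a single row. The names are built by pasting division and year together with names_sep, whose default is "_".

```r
library(dplyr)
library(tidyr)

# Hypothetical commune/year unemployment rates (illustrative values)
toy <- tibble(
  division = c("Bertrange", "Bertrange", "Differdange"),
  year     = c(2013L, 2014L, 2013L),
  rate     = c(5.69, 5.65, 13.2)
)

# No id column is left, so the result has a single row;
# column names are <division>_<year>
wide <- toy %>%
  pivot_wider(names_from = c(division, year), values_from = rate)

# Same reshape with a different separator
wide_dash <- toy %>%
  pivot_wider(names_from = c(division, year), values_from = rate,
              names_sep = "-")
```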

You might have noticed that because there is no data for the years 2016 and 2017, these columns do

not appear in the data. But suppose that we need to have these columns, so that a colleague from

another department can fill in the values. This is possible by providing a data frame with the

detailed specifications of the resulting data frame. This data frame, called a spec, must have at least two columns: .name, which contains the column names you want, and .value, which contains the name of the column that holds the values. Also, the function that uses this spec is pivot_wider_spec(), not pivot_wider().

unemp_spec <- unemp_lux_data %>%

tidyr::expand(division,

year = c(year, 2016, 2017),

.value = "unemployment_rate_in_percent") %>%

unite(".name", division, year, remove = FALSE)

unemp_spec

Here, I use another function, tidyr::expand(), which returns every combination (the Cartesian product) of the unique values of the variables you give it; unite() then pastes division and year together into the .name column.
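To illustrate expand() on its own, here is a sketch on a hypothetical tibble where Beaufort is only observed in 2001. expand() still returns the Beaufort/2002 combination. A name-value pair such as year = c(year, 2016L) adds years that are absent from the data, which is the same trick used to build unemp_spec.

```r
library(dplyr)
library(tidyr)

# Hypothetical data: Beaufort only observed in 2001, Bech in 2001 and 2002
toy <- tibble(
  division = c("Beaufort", "Bech", "Bech"),
  year     = c(2001L, 2001L, 2002L)
)

# All combinations of the unique values of division and year (2 x 2 = 4 rows),
# including Beaufort/2002, which is absent from the data
grid <- toy %>% tidyr::expand(division, year)

# A name-value pair adds a year that is not in the data (2 x 3 = 6 rows)
grid_extra <- toy %>% tidyr::expand(division, year = c(year, 2016L))
```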

To make it work, we still need to create a column that uniquely identifies each row in the data:

unemp_lux_data4 <- unemp_lux_data %>%

select(division, year, unemployment_rate_in_percent) %>%

rowid_to_column() %>%

pivot_wider_spec(spec = unemp_spec)

unemp_lux_data4

## # A tibble: 1,770 × 2,007

## rowid Beauf…¹ Beauf…² Beauf…³ Beauf…⁴ Beauf…⁵ Beauf…⁶ Beauf…⁷ Beauf…⁸ Beauf…⁹

## <int> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl>

## 1 1 5.09 NA NA NA NA NA NA NA NA

## 2 2 NA 3.5 NA NA NA NA NA NA NA

## 3 3 NA NA 5.17 NA NA NA NA NA NA

## 4 4 NA NA NA 5.07 NA NA NA NA NA

## 5 5 NA NA NA NA 5.89 NA NA NA NA

## 6 6 NA NA NA NA NA 6.72 NA NA NA

## 7 7 NA NA NA NA NA NA 4.3 NA NA

## 8 8 NA NA NA NA NA NA NA 7.08 NA

## 9 9 NA NA NA NA NA NA NA NA 8.52

## 10 10 NA NA NA NA NA NA NA NA NA

## # … with 1,760 more rows, 1,997 more variables: Beaufort_2010 <dbl>,

## # Beaufort_2011 <dbl>, Beaufort_2012 <dbl>, Beaufort_2013 <dbl>,

## # Beaufort_2014 <dbl>, Beaufort_2015 <dbl>, Beaufort_2016 <dbl>,

## # Beaufort_2017 <dbl>, Bech_2001 <dbl>, Bech_2002 <dbl>, Bech_2003 <dbl>,

## # Bech_2004 <dbl>, Bech_2005 <dbl>, Bech_2006 <dbl>, Bech_2007 <dbl>,

## # Bech_2008 <dbl>, Bech_2009 <dbl>, Bech_2010 <dbl>, Bech_2011 <dbl>,

## # Bech_2012 <dbl>, Bech_2013 <dbl>, Bech_2014 <dbl>, Bech_2015 <dbl>, …

Notice that we now have columns for 2016 and 2017 too. Let’s clean the data a little more:

unemp_lux_data4 %>%
  select(-rowid) %>%
  fill(matches(".*"), .direction = "down") %>%
  slice(n())

## # A tibble: 1 × 2,006

## Beaufort_2001 Beaufo…¹ Beauf…² Beauf…³ Beauf…⁴ Beauf…⁵ Beauf…⁶ Beauf…⁷ Beauf…⁸

## <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl>

## 1 5.09 3.5 5.17 5.07 5.89 6.72 4.3 7.08 8.52

## # … with 1,997 more variables: Beaufort_2010 <dbl>, Beaufort_2011 <dbl>,

## # Beaufort_2012 <dbl>, Beaufort_2013 <dbl>, Beaufort_2014 <dbl>,

## # Beaufort_2015 <dbl>, Beaufort_2016 <dbl>, Beaufort_2017 <dbl>,

## # Bech_2001 <dbl>, Bech_2002 <dbl>, Bech_2003 <dbl>, Bech_2004 <dbl>,

## # Bech_2005 <dbl>, Bech_2006 <dbl>, Bech_2007 <dbl>, Bech_2008 <dbl>,

## # Bech_2009 <dbl>, Bech_2010 <dbl>, Bech_2011 <dbl>, Bech_2012 <dbl>,

## # Bech_2013 <dbl>, Bech_2014 <dbl>, Bech_2015 <dbl>, Bech_2016 <dbl>, …

We will learn about fill(), another {tidyr} function, a bit later in this chapter, but its basic purpose is to replace missing values with the previous (or next) non-missing value in the same column. slice(n()) then keeps only the last row of the data frame, which is the row that contains all the values (except for 2016 and 2017, which have missing values, as we wanted).
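The fill() and slice(n()) trick can be sketched on a small made-up tibble that mimics the output of pivot_wider_spec() with a row identifier, i.e. one non-missing value per row (the column names and values are hypothetical). I use everything() here, which selects the same columns as matches(".*"):

```r
library(dplyr)
library(tidyr)

# Hypothetical wide data: each row carries exactly one non-missing value
toy_wide <- tibble(
  A_2014 = c(5.1, NA, NA),
  A_2015 = c(NA, 4.9, NA),
  B_2014 = c(NA, NA, 3.2),
  B_2015 = NA_real_  # no data at all for this column
)

collapsed <- toy_wide %>%
  # carry each value down into the rows below it
  fill(everything(), .direction = "down") %>%
  # the last row now holds every value that was observed
  slice(n())

collapsed
```

After filling downwards, the last row has 5.1, 4.9 and 3.2, while B_2015 stays NA because there was nothing to carry down.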

Here is another example of the importance of having an identifier column when using a spec:

data(mtcars)

mtcars_spec <- mtcars %>%
  tidyr::expand(am, cyl, .value = "mpg") %>%
  unite(".name", am, cyl, remove = FALSE)

mtcars_spec

We can now transform the data:

mtcars %>%
  pivot_wider_spec(spec = mtcars_spec)

## # A tibble: 32 × 14

## disp hp drat wt qsec vs gear carb `0_4` `0_6` `0_8` `1_4` `1_6`

## <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl>

## 1 160 110 3.9 2.62 16.5 0 4 4 NA NA NA NA 21

## 2 160 110 3.9 2.88 17.0 0 4 4 NA NA NA NA 21

## 3 108 93 3.85 2.32 18.6 1 4 1 NA NA NA 22.8 NA

## 4 258 110 3.08 3.22 19.4 1 3 1 NA 21.4 NA NA NA

## 5 360 175 3.15 3.44 17.0 0 3 2 NA NA 18.7 NA NA

## 6 225 105 2.76 3.46 20.2 1 3 1 NA 18.1 NA NA NA

## 7 360 245 3.21 3.57 15.8 0 3 4 NA NA 14.3 NA NA

## 8 147. 62 3.69 3.19 20 1 4 2 24.4 NA NA NA NA

## 9 141. 95 3.92 3.15 22.9 1 4 2 22.8 NA NA NA NA

## 10 168. 123 3.92 3.44 18.3 1 4 4 NA 19.2 NA NA NA

## # … with 22 more rows, and 1 more variable: `1_8` <dbl>

Here, the remaining columns (disp, hp, drat, and so on) act as identifiers, and the output still has 32 rows. As you can see, there are several values of “mpg” for some combinations of “am” times “cyl”, so if we remove the other columns, each row is no longer uniquely identified. This results in a warning message, and a tibble that contains list-columns:

mtcars %>%
  select(am, cyl, mpg) %>%
  pivot_wider_spec(spec = mtcars_spec)

## Warning: Values from `mpg` are not uniquely identified; output will contain list-cols.

## * Use `values_fn = list` to suppress this warning.

## * Use `values_fn = {summary_fun}` to summarise duplicates.

## * Use the following dplyr code to identify duplicates.

## {data} %>%

## dplyr::group_by(am, cyl) %>%

## dplyr::summarise(n = dplyr::n(), .groups = "drop") %>%

## dplyr::filter(n > 1L)

## # A tibble: 1 × 6

## `0_4` `0_6` `0_8` `1_4` `1_6` `1_8`

## <list> <list> <list> <list> <list> <list>

## 1 <dbl [3]> <dbl [4]> <dbl [12]> <dbl [8]> <dbl [3]> <dbl [2]>

We are going to learn about list-columns in the next section. List-columns are very powerful, and mastering them will be important. But generally speaking, when reshaping data, getting list-columns back often means that something went wrong, so you have to be careful with this.
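When duplicates are expected, the warning above suggests summarising them with values_fn. Here is a sketch using pivot_wider() rather than a spec, assuming the mean is an acceptable summary of mpg for each “am” times “cyl” combination (that choice is mine, for illustration only):

```r
library(dplyr)
library(tidyr)

data(mtcars)

# Several cars share the same am x cyl combination, so mpg is not uniquely
# identified; values_fn collapses the duplicates with a summary function
# (here the mean) instead of returning list-columns
mpg_by_am_cyl <- mtcars %>%
  select(am, cyl, mpg) %>%
  pivot_wider(names_from = c(am, cyl), values_from = mpg, values_fn = mean)

mpg_by_am_cyl
```

names_from = c(am, cyl) pastes the two values together with "_" (the default separator), so the column names follow the same pattern as in mtcars_spec, although their order follows the first appearance of each combination in the data. Each cell now holds a single number, the average mpg of the cars in that group; whether a mean, a median or a count is the right summary depends on the analysis.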

pivot_longer() is used when you need to go from a wide to a long dataset, meaning a dataset where some columns should not be columns but rather the levels of a factor variable. Let’s suppose that the “am” column is split into two columns, 0 for automatic and 1 for manual transmissions, and that the values filling these columns are miles per gallon, “mpg”:

mtcars_wide_am <- mtcars %>%
  pivot_wider(names_from = am, values_from = mpg)

mtcars_wide_am %>%
  select(`0`, `1`, everything())

## # A tibble: 32 × 11

## `0` `1` cyl disp hp drat wt qsec vs gear carb

## <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl>

## 1 NA 21 6 160 110 3.9 2.62 16.5 0 4 4

## 2 NA 21 6 160 110 3.9 2.88 17.0 0 4 4

## 3 NA 22.8 4 108 93 3.85 2.32 18.6 1 4 1

## 4 21.4 NA 6 258 110 3.08 3.22 19.4 1 3 1

## 5 18.7 NA 8 360 175 3.15 3.44 17.0 0 3 2

## 6 18.1 NA 6 225 105 2.76 3.46 20.2 1 3 1

## 7 14.3 NA 8 360 245 3.21 3.57 15.8 0 3 4

## 8 24.4 NA 4 147. 62 3.69 3.19 20 1 4 2

## 9 22.8 NA 4 141. 95 3.92 3.15 22.9 1 4 2

## 10 19.2 NA 6 168. 123 3.92 3.44 18.3 1 4 4

## # … with 22 more rows

As you can see, the “0” and “1” columns should not be columns of their own (unless there is a very specific and good reason for it); rather, they should be the levels of another column, in our case “am”.

We can go back to a long dataset like so:

mtcars_wide_am %>%
  pivot_longer(cols = c(`1`, `0`), names_to = "am", values_to = "mpg") %>%
  select(am, mpg, everything())

## # A tibble: 64 × 11

## am mpg cyl disp hp drat wt qsec vs gear carb

## <chr> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl>

## 1 1 21 6 160 110 3.9 2.62 16.5 0 4 4

## 2 0 NA 6 160 110 3.9 2.62 16.5 0 4 4

## 3 1 21 6 160 110 3.9 2.88 17.0 0 4 4

## 4 0 NA 6 160 110 3.9 2.88 17.0 0 4 4

## 5 1 22.8 4 108 93 3.85 2.32 18.6 1 4 1

## 6 0 NA 4 108 93 3.85 2.32 18.6 1 4 1

## 7 1 NA 6 258 110 3.08 3.22 19.4 1 3 1

## 8 0 21.4 6 258 110 3.08 3.22 19.4 1 3 1

## 9 1 NA 8 360 175 3.15 3.44 17.0 0 3 2

## 10 0 18.7 8 360 175 3.15 3.44 17.0 0 3 2

## # … with 54 more rows

In the cols argument, you need to list all the variables that need to be transformed. Only `1` and `0` must be pivoted, so I list them. Just for illustration purposes, imagine that we needed to pivot 50 columns. It would be faster to list the columns that do not need to be pivoted. This can be achieved by listing the columns that must be excluded with a - in front, possibly using matches() with a regular expression:

mtcars_wide_am %>%
  pivot_longer(cols = -matches("^[[:alpha:]]"),
               names_to = "am",
               values_to = "mpg") %>%
  select(am, mpg, everything())

## # A tibble: 64 × 11

## am mpg cyl disp hp drat wt qsec vs gear carb

## <chr> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl>

## 1 1 21 6 160 110 3.9 2.62 16.5 0 4 4

## 2 0 NA 6 160 110 3.9 2.62 16.5 0 4 4

## 3 1 21 6 160 110 3.9 2.88 17.0 0 4 4

## 4 0 NA 6 160 110 3.9 2.88 17.0 0 4 4

## 5 1 22.8 4 108 93 3.85 2.32 18.6 1 4 1

## 6 0 NA 4 108 93 3.85 2.32 18.6 1 4 1

## 7 1 NA 6 258 110 3.08 3.22 19.4 1 3 1

## 8 0 21.4 6 258 110 3.08 3.22 19.4 1 3 1

## 9 1 NA 8 360 175 3.15 3.44 17.0 0 3 2

## 10 0 18.7 8 360 175 3.15 3.44 17.0 0 3 2

## # … with 54 more rows

Every column whose name starts with a letter can stay as it is, so there is no need to pivot it; only `0` and `1` do not match the regular expression "^[[:alpha:]]", so only they get pivoted. I use the matches() function with a regular expression so that I don’t have to type the names of all the columns. select() is used to re-order the columns, only for viewing purposes.
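To check which columns a selection helper picks up before handing it to pivot_longer(), you can try it inside select() on a small tibble. Here is a minimal sketch with a hypothetical tibble whose column names mimic those of mtcars_wide_am (the values are made up):

```r
library(dplyr)

# Hypothetical tibble: two columns named by digits, two named by letters
toy <- tibble(`0` = 21.4, `1` = 21, cyl = 6, disp = 160)

# matches() keeps the columns whose names match the regular expression
starts_with_letter <- names(select(toy, matches("^[[:alpha:]]")))
starts_with_letter  # "cyl" "disp"

# the leading minus inverts the selection: these are the columns to pivot
to_pivot <- names(select(toy, -matches("^[[:alpha:]]")))
to_pivot            # "0" "1"
```

The same expression, -matches("^[[:alpha:]]"), is what the cols argument receives, so the check tells you exactly which columns will be turned into rows.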

names_to = takes a string as argument, which will be the name of the new column containing the levels 0 and 1, and values_to = also takes a string as argument, which will be the name of the column containing the values. Note that the new am column is of type character (<chr>), since it was built from column names. Finally, you can see that there are a lot of NAs in the output. These can be removed easily with values_drop_na = TRUE:

mtcars_wide_am %>%
  pivot_longer(cols = c(`1`, `0`),
               names_to = "am",
               values_to = "mpg",
               values_drop_na = TRUE) %>%
  select(am, mpg, everything())

## # A tibble: 32 × 11

## am mpg cyl disp hp drat wt qsec vs gear carb

## <chr> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl>

## 1 1 21 6 160 110 3.9 2.62 16.5 0 4 4

## 2 1 21 6 160 110 3.9 2.88 17.0 0 4 4

## 3 1 22.8 4 108 93 3.85 2.32 18.6 1 4 1

## 4 0 21.4 6 258 110 3.08 3.22 19.4 1 3 1

## 5 0 18.7 8 360 175 3.15 3.44 17.0 0 3 2

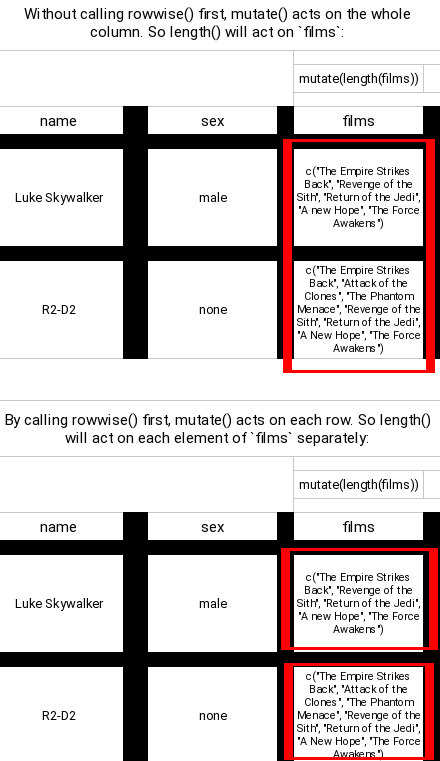

## 6 0 18.1 6 225 105 2.76 3.46 20.2 1 3 1